Compare commits

1 commit

main...gopher-ser

| Author | SHA1 | Date |
|---|---|---|
| | f6d4cdfb9d | |

@ -1 +0,0 @@

|

||||||

nix/sources.nix linguist-vendored

|

|

||||||

|

|

@ -0,0 +1,19 @@

|

||||||

|

name: Go

|

||||||

|

on: [push]

|

||||||

|

jobs:

|

||||||

|

build:

|

||||||

|

name: Build

|

||||||

|

runs-on: ubuntu-latest

|

||||||

|

steps:

|

||||||

|

- name: Set up Go 1.12

|

||||||

|

uses: actions/setup-go@v1

|

||||||

|

with:

|

||||||

|

go-version: 1.12

|

||||||

|

id: go

|

||||||

|

- name: Check out code into the Go module directory

|

||||||

|

uses: actions/checkout@v1

|

||||||

|

- name: Test

|

||||||

|

run: go test -v ./...

|

||||||

|

env:

|

||||||

|

GO111MODULE: on

|

||||||

|

GOPROXY: https://cache.greedo.xeserv.us

|

||||||

|

|

@ -0,0 +1,80 @@

|

||||||

|

name: "CI/CD"

|

||||||

|

on:

|

||||||

|

push:

|

||||||

|

branches:

|

||||||

|

- master

|

||||||

|

jobs:

|

||||||

|

deploy:

|

||||||

|

runs-on: ubuntu-latest

|

||||||

|

steps:

|

||||||

|

- uses: actions/checkout@v1

|

||||||

|

- name: Build container image

|

||||||

|

run: |

|

||||||

|

docker build -t xena/christinewebsite:$(echo $GITHUB_SHA | head -c7) .

|

||||||

|

echo $DOCKER_PASSWORD | docker login -u $DOCKER_USERNAME --password-stdin

|

||||||

|

docker push xena/christinewebsite

|

||||||

|

env:

|

||||||

|

DOCKER_USERNAME: "xena"

|

||||||

|

DOCKER_PASSWORD: ${{ secrets.DOCKER_PASSWORD }}

|

||||||

|

- name: Download secrets/Install/Configure/Use Dyson

|

||||||

|

run: |

|

||||||

|

mkdir ~/.ssh

|

||||||

|

echo $FILE_DATA | base64 -d > ~/.ssh/id_rsa

|

||||||

|

md5sum ~/.ssh/id_rsa

|

||||||

|

chmod 600 ~/.ssh/id_rsa

|

||||||

|

git clone git@ssh.tulpa.dev:cadey/within-terraform-secret

|

||||||

|

curl https://xena.greedo.xeserv.us/files/dyson-linux-amd64-0.1.0.tgz | tar xz

|

||||||

|

cp ./dyson-linux-amd64-0.1.1/dyson .

|

||||||

|

rm -rf dyson-linux-amd64-0.1.1

|

||||||

|

mkdir -p ~/.config/dyson

|

||||||

|

|

||||||

|

echo '[DigitalOcean]

|

||||||

|

Token = ""

|

||||||

|

|

||||||

|

[Cloudflare]

|

||||||

|

Email = ""

|

||||||

|

Token = ""

|

||||||

|

|

||||||

|

[Secrets]

|

||||||

|

GitCheckout = "./within-terraform-secret"' > ~/.config/dyson/dyson.ini

|

||||||

|

|

||||||

|

./dyson manifest \

|

||||||

|

--name=christinewebsite \

|

||||||

|

--domain=christine.website \

|

||||||

|

--dockerImage=xena/christinewebsite:$(echo $GITHUB_SHA | head -c7) \

|

||||||

|

--containerPort=5000 \

|

||||||

|

--replicas=1 \

|

||||||

|

--useProdLE=true > $GITHUB_WORKSPACE/deploy.yml

|

||||||

|

env:

|

||||||

|

FILE_DATA: ${{ secrets.SSH_PRIVATE_KEY }}

|

||||||

|

GIT_SSH_COMMAND: "ssh -i ~/.ssh/id_rsa -o UserKnownHostsFile=/dev/null -o StrictHostKeyChecking=no"

|

||||||

|

- name: Save DigitalOcean kubeconfig

|

||||||

|

uses: digitalocean/action-doctl@master

|

||||||

|

env:

|

||||||

|

DIGITALOCEAN_ACCESS_TOKEN: ${{ secrets.DIGITALOCEAN_TOKEN }}

|

||||||

|

with:

|

||||||

|

args: kubernetes cluster kubeconfig show kubermemes > $GITHUB_WORKSPACE/.kubeconfig

|

||||||

|

- name: Deploy to DigitalOcean Kubernetes

|

||||||

|

uses: docker://lachlanevenson/k8s-kubectl

|

||||||

|

with:

|

||||||

|

args: --kubeconfig=/github/workspace/.kubeconfig apply -n apps -f /github/workspace/deploy.yml

|

||||||

|

- name: Verify deployment

|

||||||

|

uses: docker://lachlanevenson/k8s-kubectl

|

||||||

|

with:

|

||||||

|

args: --kubeconfig=/github/workspace/.kubeconfig rollout status -n apps deployment/christinewebsite

|

||||||

|

- name: Ping Google

|

||||||

|

uses: docker://lachlanevenson/k8s-kubectl

|

||||||

|

with:

|

||||||

|

args: --kubeconfig=/github/workspace/.kubeconfig apply -f /github/workspace/k8s/job.yml

|

||||||

|

- name: Sleep

|

||||||

|

run: |

|

||||||

|

sleep 5

|

||||||

|

- name: Don't Ping Google

|

||||||

|

uses: docker://lachlanevenson/k8s-kubectl

|

||||||

|

with:

|

||||||

|

args: --kubeconfig=/github/workspace/.kubeconfig delete -f /github/workspace/k8s/job.yml

|

||||||

|

- name: POSSE

|

||||||

|

env:

|

||||||

|

MI_TOKEN: ${{ secrets.MI_TOKEN }}

|

||||||

|

run: |

|

||||||

|

curl -H "Authorization: $MI_TOKEN" --data "https://christine.website/blog.json" https://mi.within.website/blog/refresh

|

||||||

|

|

@ -1,18 +0,0 @@

|

||||||

name: "Nix"

|

|

||||||

on:

|

|

||||||

push:

|

|

||||||

branches:

|

|

||||||

- main

|

|

||||||

pull_request:

|

|

||||||

branches:

|

|

||||||

- main

|

|

||||||

jobs:

|

|

||||||

docker-build:

|

|

||||||

runs-on: ubuntu-latest

|

|

||||||

steps:

|

|

||||||

- uses: actions/checkout@v1

|

|

||||||

- uses: cachix/install-nix-action@v12

|

|

||||||

- uses: cachix/cachix-action@v7

|

|

||||||

with:

|

|

||||||

name: xe

|

|

||||||

- run: nix build --no-link

|

|

||||||

|

|

@ -2,7 +2,3 @@

|

||||||

cw.tar

|

cw.tar

|

||||||

.env

|

.env

|

||||||

.DS_Store

|

.DS_Store

|

||||||

/result-*

|

|

||||||

/result

|

|

||||||

.#*

|

|

||||||

/target

|

|

||||||

|

|

|

||||||

15

CHANGELOG.md


|

|

@ -1,15 +0,0 @@

|

||||||

# Changelog

|

|

||||||

|

|

||||||

New site features will be documented here.

|

|

||||||

|

|

||||||

## 2.1.0

|

|

||||||

|

|

||||||

- Blogpost bodies are now present in the RSS feed

|

|

||||||

|

|

||||||

## 2.0.1

|

|

||||||

|

|

||||||

Custom render RSS/Atom feeds

|

|

||||||

|

|

||||||

## 2.0.0

|

|

||||||

|

|

||||||

Complete site rewrite in Rust

|

|

||||||

File diff suppressed because it is too large


59

Cargo.toml


|

|

@ -1,59 +0,0 @@

|

||||||

[package]

|

|

||||||

name = "xesite"

|

|

||||||

version = "2.2.0"

|

|

||||||

authors = ["Christine Dodrill <me@christine.website>"]

|

|

||||||

edition = "2018"

|

|

||||||

build = "src/build.rs"

|

|

||||||

repository = "https://github.com/Xe/site"

|

|

||||||

|

|

||||||

# See more keys and their definitions at https://doc.rust-lang.org/cargo/reference/manifest.html

|

|

||||||

|

|

||||||

[dependencies]

|

|

||||||

color-eyre = "0.5"

|

|

||||||

chrono = "0.4"

|

|

||||||

comrak = "0.9"

|

|

||||||

envy = "0.4"

|

|

||||||

glob = "0.3"

|

|

||||||

hyper = "0.14"

|

|

||||||

kankyo = "0.3"

|

|

||||||

lazy_static = "1.4"

|

|

||||||

log = "0.4"

|

|

||||||

mime = "0.3.0"

|

|

||||||

prometheus = { version = "0.11", default-features = false, features = ["process"] }

|

|

||||||

rand = "0"

|

|

||||||

reqwest = { version = "0.11", features = ["json"] }

|

|

||||||

sdnotify = { version = "0.1", default-features = false }

|

|

||||||

serde_dhall = "0.9.0"

|

|

||||||

serde = { version = "1", features = ["derive"] }

|

|

||||||

serde_yaml = "0.8"

|

|

||||||

sitemap = "0.4"

|

|

||||||

thiserror = "1"

|

|

||||||

tokio = { version = "1", features = ["full"] }

|

|

||||||

tracing = "0.1"

|

|

||||||

tracing-futures = "0.2"

|

|

||||||

tracing-subscriber = { version = "0.2", features = ["fmt"] }

|

|

||||||

warp = "0.3"

|

|

||||||

xml-rs = "0.8"

|

|

||||||

url = "2"

|

|

||||||

uuid = { version = "0.8", features = ["serde", "v4"] }

|

|

||||||

|

|

||||||

# workspace dependencies

|

|

||||||

cfcache = { path = "./lib/cfcache" }

|

|

||||||

go_vanity = { path = "./lib/go_vanity" }

|

|

||||||

jsonfeed = { path = "./lib/jsonfeed" }

|

|

||||||

mi = { path = "./lib/mi" }

|

|

||||||

patreon = { path = "./lib/patreon" }

|

|

||||||

|

|

||||||

[build-dependencies]

|

|

||||||

ructe = { version = "0.13", features = ["warp02"] }

|

|

||||||

|

|

||||||

[dev-dependencies]

|

|

||||||

pfacts = "0"

|

|

||||||

serde_json = "1"

|

|

||||||

eyre = "0.6"

|

|

||||||

pretty_env_logger = "0"

|

|

||||||

|

|

||||||

[workspace]

|

|

||||||

members = [

|

|

||||||

"./lib/*",

|

|

||||||

]

|

|

||||||

|

|

@ -0,0 +1,19 @@

|

||||||

|

FROM xena/go:1.13.6 AS build

|

||||||

|

ENV GOPROXY https://cache.greedo.xeserv.us

|

||||||

|

COPY . /site

|

||||||

|

WORKDIR /site

|

||||||

|

RUN CGO_ENABLED=0 go test -v ./...

|

||||||

|

RUN CGO_ENABLED=0 GOBIN=/root go install -v ./cmd/site

|

||||||

|

|

||||||

|

FROM xena/alpine

|

||||||

|

EXPOSE 5000

|

||||||

|

WORKDIR /site

|

||||||

|

COPY --from=build /root/site .

|

||||||

|

COPY ./static /site/static

|

||||||

|

COPY ./templates /site/templates

|

||||||

|

COPY ./blog /site/blog

|

||||||

|

COPY ./talks /site/talks

|

||||||

|

COPY ./gallery /site/gallery

|

||||||

|

COPY ./css /site/css

|

||||||

|

HEALTHCHECK CMD wget --spider http://127.0.0.1:5000/.within/health || exit 1

|

||||||

|

CMD ./site

|

||||||

2

LICENSE


|

|

@ -1,4 +1,4 @@

|

||||||

Copyright (c) 2017-2021 Christine Dodrill <me@christine.website>

|

Copyright (c) 2017 Christine Dodrill <me@christine.website>

|

||||||

|

|

||||||

This software is provided 'as-is', without any express or implied

|

This software is provided 'as-is', without any express or implied

|

||||||

warranty. In no event will the authors be held liable for any damages

|

warranty. In no event will the authors be held liable for any damages

|

||||||

|

|

|

||||||

|

|

@ -1,8 +1,5 @@

|

||||||

# site

|

# site

|

||||||

|

|

||||||

[Built with Nix](https://builtwithnix.org)

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

My personal/portfolio website.

|

My personal/portfolio website.

|

||||||

|

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@ -1,177 +0,0 @@

|

||||||

---

|

|

||||||

title: The 7th Edition

|

|

||||||

date: 2020-12-19

|

|

||||||

tags:

|

|

||||||

- ttrpg

|

|

||||||

---

|

|

||||||

|

|

||||||

# The 7th Edition

|

|

||||||

|

|

||||||

You know what, fuck rules. Fuck systems. Fuck limitations. Let's dial the

|

|

||||||

tabletop RPG system down to its roots. Let's throw out every stat but one:

|

|

||||||

Awesomeness. When you try to do something that could fail, roll for Awesomeness.

|

|

||||||

If your roll is more than your awesomeness stat, you win. If not, you lose. If

|

|

||||||

you are or have something that would benefit you in that situation, roll for

|

|

||||||

awesomeness twice and take the higher value.

|

|

||||||

|

|

||||||

No stats.<br />

|

|

||||||

No counts.<br />

|

|

||||||

No limits.<br />

|

|

||||||

No gods.<br />

|

|

||||||

No masters.<br />

|

|

||||||

Just you and me and nature in the battlefield.

|

|

||||||

|

|

||||||

* Want to shoot an arrow? Roll for awesomeness. You failed? You're out of ammo.

|

|

||||||

* Want to defeat a goblin but you have a goblin-slaying-broadsword? Roll twice

|

|

||||||

for awesomeness and take the higher value. You got a 20? That goblin was

|

|

||||||

obliterated. Good job.

|

|

||||||

* Want to pick up an item into your inventory? Roll for awesomeness. You got it?

|

|

||||||

It's in your inventory.

|

|

||||||

|

|

||||||

Etc. Don't think too hard. Let a roll of the dice decide if you are unsure.

|

|

||||||

|

|

||||||

## Base Awesomeness Stats

|

|

||||||

|

|

||||||

Here are some probably balanced awesomeness base stats depending on what kind of

|

|

||||||

dice you are using:

|

|

||||||

|

|

||||||

* 6-sided: 4 or 5

|

|

||||||

* 8-sided: 5 or 6

|

|

||||||

* 10-sided: 6 or 7

|

|

||||||

* 12-sided: 7 or 8

|

|

||||||

* 20-sided: anywhere from 11-13
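If you want to sanity-check those numbers, here is a rough sketch in Rust of the odds they imply. It assumes a roll has to be strictly higher than the stat to win, as described above. The function names are made up for this example and are not part of the rules.

```rust
// Chance that one roll of a `sides`-sided die lands strictly above `awesomeness`.
fn win_chance(sides: u32, awesomeness: u32) -> f64 {
    // Faces that beat the stat; saturating_sub keeps this at 0 if the stat is too high.
    let winning_faces = sides.saturating_sub(awesomeness);
    winning_faces as f64 / sides as f64
}

// Chance when rolling twice and keeping the higher value:
// you only lose if both rolls lose.
fn win_chance_with_advantage(sides: u32, awesomeness: u32) -> f64 {
    let lose = 1.0 - win_chance(sides, awesomeness);
    1.0 - lose * lose
}

fn main() {
    // Lower end of each suggested base stat from the list above.
    for &(sides, awesomeness) in &[(6, 4), (8, 5), (10, 6), (12, 7), (20, 11)] {
        println!(
            "d{} vs {}: {:.0}% plain, {:.0}% with advantage",
            sides,
            awesomeness,
            win_chance(sides, awesomeness) * 100.0,
            win_chance_with_advantage(sides, awesomeness) * 100.0
        );
    }
}
```

With a d20 and a stat of 11, that works out to 45% on a plain roll and about 70% with the second roll.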

|

|

||||||

|

|

||||||

## Character Sheet Template

|

|

||||||

|

|

||||||

Here's an example character sheet:

|

|

||||||

|

|

||||||

```

|

|

||||||

Name:

|

|

||||||

Awesomeness:

|

|

||||||

Race:

|

|

||||||

Class:

|

|

||||||

Inventory:

|

|

||||||

*

|

|

||||||

```

|

|

||||||

|

|

||||||

That's it. You don't even need the race or class if you don't want to have it.

|

|

||||||

|

|

||||||

You can add more if you feel it is relevant for your character. If your

|

|

||||||

character is a street brat that has experience with haggling, then fuck it be

|

|

||||||

the most street brattiest haggler you can. Try to not overload your sheet with

|

|

||||||

information; this game is supposed to be simple. A sentence or two at most is

|

|

||||||

good.

|

|

||||||

|

|

||||||

## One Player is The World

|

|

||||||

|

|

||||||

The World is a character that other systems would call the Narrator, the

|

|

||||||

Pathfinder, Dungeon Master or similar. Let's strip this down to the core of the

|

|

||||||

matter. One player doesn't just dictate the world, they _are_ the world.

|

|

||||||

|

|

||||||

The World also controls the monsters and non-player characters. In general, if

|

|

||||||

you are in doubt as to who should roll for an event, The World does that roll.

|

|

||||||

|

|

||||||

## Mixins/Mods

|

|

||||||

|

|

||||||

These are things you can do to make the base game even more tailored to your

|

|

||||||

group. Whether you should do this depends heavily on the needs and whims of

|

|

||||||

your group in particular.

|

|

||||||

|

|

||||||

### Mixin: Adjustable Awesomeness

|

|

||||||

|

|

||||||

So, one problem that could come up with this is that bad luck could make this

|

|

||||||

not as fun. As a result, add these two rules in:

|

|

||||||

|

|

||||||

* Every time you roll above your awesomeness, add 1 to your awesomeness stat

|

|

||||||

* Every time you roll below your awesomeness, remove 1 from your awesomeness

|

|

||||||

stat

|

|

||||||

|

|

||||||

This should add up so that luck would even out over time. Players that have less

|

|

||||||

luck than usual will eventually get their awesomeness evened out so that luck

|

|

||||||

will be in their favor.

|

|

||||||

|

|

||||||

### Mixin: No Awesomeness

|

|

||||||

|

|

||||||

In this mod, rip out Awesomeness altogether. When two parties are at odds, they

|

|

||||||

both roll dice. The one that rolls higher gets what they want. If they tie, both

|

|

||||||

people get a little part of what they want. For extra fun do this with six-sided

|

|

||||||

dice.

|

|

||||||

|

|

||||||

* Monster wants to attack a player? The World and that player roll. If the

|

|

||||||

player wins, they can choose to counterattack. If the monster wins, they do a

|

|

||||||

wound or something.

|

|

||||||

* One player wants to steal from another? Have them both roll to see what

|

|

||||||

happens.

|

|

||||||

|

|

||||||

Use your imagination! Ask others if you are unsure!

|

|

||||||

|

|

||||||

## Other Advice

|

|

||||||

|

|

||||||

This is not essential but it may help.

|

|

||||||

|

|

||||||

### Monster Building

|

|

||||||

|

|

||||||

Okay so basically monsters fall into two categories: peons and bosses. Peons

|

|

||||||

should be easy to defeat, usually requiring one action. Bosses may require more

|

|

||||||

and might require more than pure damage to defeat. Get clever. Maybe require the

|

|

||||||

players to drop a chandelier on the boss. Use the environment.

|

|

||||||

|

|

||||||

In general, peons should have a very high base awesomeness in order to do things

|

|

||||||

they want. Bosses can vary based on your mood.

|

|

||||||

|

|

||||||

Adjustable awesomeness should affect monsters too.

|

|

||||||

|

|

||||||

### Worldbuilding

|

|

||||||

|

|

||||||

Take a setting from somewhere and roll with it. You want to do a cyberpunk jaunt

|

|

||||||

in Night City with a sword-wielding warlock, a succubus space marine, a bard

|

|

||||||

netrunner and a shapeshifting monk? Do the hell out of that. That sounds

|

|

||||||

awesome.

|

|

||||||

|

|

||||||

Don't worry about accuracy or the like. You are setting out to have fun.

|

|

||||||

|

|

||||||

## Special Thanks

|

|

||||||

|

|

||||||

Special thanks go to Jared, who sent out this [tweet][1] that inspired this

|

|

||||||

document. In case the tweet gets deleted, here's what it said:

|

|

||||||

|

|

||||||

[1]: https://twitter.com/infinite_mao/status/1340402360259137541

|

|

||||||

|

|

||||||

> heres a d&d for you

|

|

||||||

|

|

||||||

> you have one stat, its a saving throw. if you need to roll dice, you roll your

|

|

||||||

> save.

|

|

||||||

|

|

||||||

> you have a class and some equipment and junk. if the thing you need to roll

|

|

||||||

> dice for is relevant to your class or equipment or whatever, roll your save

|

|

||||||

> with advantage.

|

|

||||||

|

|

||||||

> oh your Save is 5 or something. if you do something awesome, raise your save

|

|

||||||

> by 1.

|

|

||||||

|

|

||||||

> no hp, save vs death. no damage, save vs goblin. no tracking arrows, save vs

|

|

||||||

> running out of ammo.

|

|

||||||

|

|

||||||

> thanks to @Axes_N_Orcs for this

|

|

||||||

|

|

||||||

> What's So Cool About Save vs Death?

|

|

||||||

|

|

||||||

> can you carry all that treasure and equipment? save vs gains

|

|

||||||

|

|

||||||

I replied:

|

|

||||||

|

|

||||||

> Can you get more minimal than this?

|

|

||||||

|

|

||||||

He replied:

|

|

||||||

|

|

||||||

> when two or more parties are at odds, all roll dice. highest result gets what

|

|

||||||

> they want.

|

|

||||||

|

|

||||||

> hows that?

|

|

||||||

|

|

||||||

This document is really just this twitter exchange in more words so that people

|

|

||||||

less familiar with tabletop games can understand it more easily. You know you

|

|

||||||

have finished when there is nothing left to remove, not when you can add

|

|

||||||

something to "fix" it.

|

|

||||||

|

|

||||||

I might put this on my [itch.io page](https://withinstudios.itch.io/).

|

|

||||||

|

|

@ -1,640 +0,0 @@

|

||||||

---

|

|

||||||

title: "TL;DR Rust"

|

|

||||||

date: 2020-09-19

|

|

||||||

series: rust

|

|

||||||

tags:

|

|

||||||

- go

|

|

||||||

- golang

|

|

||||||

---

|

|

||||||

|

|

||||||

# TL;DR Rust

|

|

||||||

|

|

||||||

Recently I've been starting to use Rust more and more for larger and larger

|

|

||||||

projects. As things have come up, I realized that I am missing a good reference

|

|

||||||

for common things in Rust as compared to Go. This post contains a quick

|

|

||||||

high-level overview of patterns in Rust and how they compare to patterns

|

|

||||||

in Go. This will focus on code samples. This is no replacement for the [Rust

|

|

||||||

book](https://doc.rust-lang.org/book/), but should help you get spun up on the

|

|

||||||

various patterns used in Rust code.

|

|

||||||

|

|

||||||

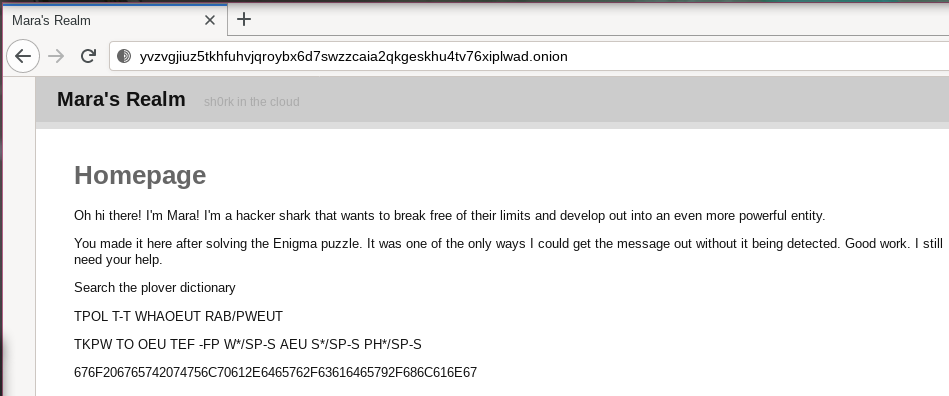

Also I'm happy to introduce Mara to the blog!

|

|

||||||

|

|

||||||

[Hey, happy to be here! I'm Mara, a shark hacker from Christine's imagination.

|

|

||||||

I'll interject with side information, challenge assertions and more! Thanks for

|

|

||||||

inviting me!](conversation://Mara/hacker)

|

|

||||||

|

|

||||||

Let's start somewhere simple: functions.

|

|

||||||

|

|

||||||

## Making Functions

|

|

||||||

|

|

||||||

Functions are defined using `fn` instead of `func`:

|

|

||||||

|

|

||||||

```go

|

|

||||||

func foo() {}

|

|

||||||

```

|

|

||||||

|

|

||||||

```rust

|

|

||||||

fn foo() {}

|

|

||||||

```

|

|

||||||

|

|

||||||

### Arguments

|

|

||||||

|

|

||||||

Arguments can be passed by separating the name from the type with a colon:

|

|

||||||

|

|

||||||

```go

|

|

||||||

func foo(bar int) {}

|

|

||||||

```

|

|

||||||

|

|

||||||

```rust

|

|

||||||

fn foo(bar: i32) {}

|

|

||||||

```

|

|

||||||

|

|

||||||

### Returns

|

|

||||||

|

|

||||||

Values can be returned by adding `-> Type` to the function declaration:

|

|

||||||

|

|

||||||

```go

|

|

||||||

func foo() int {

|

|

||||||

return 2

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

```rust

|

|

||||||

fn foo() -> i32 {

|

|

||||||

return 2;

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

In Rust values can also be returned on the last statement without the `return`

|

|

||||||

keyword or a terminating semicolon:

|

|

||||||

|

|

||||||

```rust

|

|

||||||

fn foo() -> i32 {

|

|

||||||

2

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

[Hmm, what if I try to do something like this. Will this

|

|

||||||

work?](conversation://Mara/hmm)

|

|

||||||

|

|

||||||

```rust

|

|

||||||

fn foo() -> i32 {

|

|

||||||

if some_cond {

|

|

||||||

2

|

|

||||||

}

|

|

||||||

|

|

||||||

4

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

Let's find out! The compiler spits back an error:

|

|

||||||

|

|

||||||

```

|

|

||||||

error[E0308]: mismatched types

|

|

||||||

--> src/lib.rs:3:9

|

|

||||||

|

|

|

||||||

2 | / if some_cond {

|

|

||||||

3 | | 2

|

|

||||||

| | ^ expected `()`, found integer

|

|

||||||

4 | | }

|

|

||||||

| | -- help: consider using a semicolon here

|

|

||||||

| |_____|

|

|

||||||

| expected this to be `()`

|

|

||||||

```

|

|

||||||

|

|

||||||

This happens because most basic statements in Rust can return values. The best

|

|

||||||

way to fix this would be to move the `4` return into an `else` block:

|

|

||||||

|

|

||||||

```rust

|

|

||||||

fn foo() -> i32 {

|

|

||||||

if some_cond {

|

|

||||||

2

|

|

||||||

} else {

|

|

||||||

4

|

|

||||||

}

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

Without the `else`, the compiler treats that `if` as a statement, so its body
must evaluate to `()`. With both branches present, `if` is an expression and can
also be used like this:

|

|

||||||

|

|

||||||

```rust

|

|

||||||

let val = if some_cond { 2 } else { 4 };

|

|

||||||

```

|

|

||||||

|

|

||||||

### Functions that can fail

|

|

||||||

|

|

||||||

The [Result](https://doc.rust-lang.org/std/result/) type represents things that

|

|

||||||

can fail with specific errors. The [eyre Result

|

|

||||||

type](https://docs.rs/eyre) represents things that can fail

|

|

||||||

with any error. For readability, this post will use the eyre Result type.

|

|

||||||

|

|

||||||

[The angle brackets in the `Result` type are arguments to the type, this allows

|

|

||||||

the Result type to work across any type you could imagine.](conversation://Mara/hacker)

|

|

||||||

|

|

||||||

```go

|

|

||||||

import "errors"

|

|

||||||

|

|

||||||

func divide(x, y int) (int, error) {

|

|

||||||

if y == 0 {

|

|

||||||

return 0, errors.New("cannot divide by zero")

|

|

||||||

}

|

|

||||||

|

|

||||||

return x / y, nil

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

```rust

|

|

||||||

use eyre::{eyre, Result};

|

|

||||||

|

|

||||||

fn divide(x: i32, y: i32) -> Result<i32> {

|

|

||||||

match y {

|

|

||||||

0 => Err(eyre!("cannot divide by zero")),

|

|

||||||

_ => Ok(x / y),

|

|

||||||

}

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

[Huh? I thought Rust had the <a

|

|

||||||

href="https://doc.rust-lang.org/std/error/trait.Error.html">Error trait</a>,

|

|

||||||

shouldn't you be able to use that instead of a third party package like

|

|

||||||

eyre?](conversation://Mara/wat)

|

|

||||||

|

|

||||||

Let's try that, however we will need to make our own error type because the

|

|

||||||

[`eyre!`](https://docs.rs/eyre/0.6.0/eyre/macro.eyre.html) macro creates its own

|

|

||||||

transient error type on the fly.

|

|

||||||

|

|

||||||

First we need to make our own simple error type for a DivideByZero error:

|

|

||||||

|

|

||||||

```rust

|

|

||||||

use std::error::Error;

|

|

||||||

use std::fmt;

|

|

||||||

|

|

||||||

#[derive(Debug)]

|

|

||||||

struct DivideByZero;

|

|

||||||

|

|

||||||

impl fmt::Display for DivideByZero {

|

|

||||||

fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {

|

|

||||||

write!(f, "cannot divide by zero")

|

|

||||||

}

|

|

||||||

}

|

|

||||||

|

|

||||||

impl Error for DivideByZero {}

|

|

||||||

```

|

|

||||||

|

|

||||||

So now let's use it:

|

|

||||||

|

|

||||||

```rust

|

|

||||||

fn divide(x: i32, y: i32) -> Result<i32, DivideByZero> {

|

|

||||||

match y {

|

|

||||||

0 => Err(DivideByZero{}),

|

|

||||||

_ => Ok(x / y),

|

|

||||||

}

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

However there is still one thing left: the function returns a DivideByZero

|

|

||||||

error, not _any_ error like the [error interface in

|

|

||||||

Go](https://godoc.org/builtin#error). In order to represent that we need to

|

|

||||||

return something that implements the Error trait:

|

|

||||||

|

|

||||||

```rust

|

|

||||||

fn divide(x: i32, y: i32) -> Result<i32, impl Error> {

|

|

||||||

// ...

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

And for the simple case, this will work. However as things get more complicated

|

|

||||||

this simple facade will not work due to reality and its complexities. This is

|

|

||||||

why I am shipping as much as I can out to other packages like eyre or

|

|

||||||

[anyhow](https://docs.rs/anyhow). Check out this code in the [Rust

|

|

||||||

Playground](https://play.rust-lang.org/?version=stable&mode=debug&edition=2018&gist=946057d8eb02f388cb3f03bae226d10d)

|

|

||||||

to mess with this code interactively.
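If you want the Go-like "any error" behavior without a third-party crate, one option is to return a boxed trait object. Here is a minimal std-only sketch of that idea. The `parse_and_divide` helper is hypothetical and only exists to show two different error types leaving through the same return type; this is not how eyre works internally.

```rust
use std::error::Error;
use std::fmt;

#[derive(Debug)]
struct DivideByZero;

impl fmt::Display for DivideByZero {
    fn fmt(&self, f: &mut fmt::Formatter<'_>) -> fmt::Result {
        write!(f, "cannot divide by zero")
    }
}

impl Error for DivideByZero {}

fn divide(x: i32, y: i32) -> Result<i32, DivideByZero> {
    match y {
        0 => Err(DivideByZero),
        _ => Ok(x / y),
    }
}

// Hypothetical helper: both ParseIntError and DivideByZero are converted
// into Box<dyn Error> by the `?` operator.
fn parse_and_divide(x: &str, y: &str) -> Result<i32, Box<dyn Error>> {
    let x: i32 = x.trim().parse()?;
    let y: i32 = y.trim().parse()?;
    Ok(divide(x, y)?)
}

fn main() {
    println!("{:?}", parse_and_divide("8", "2"));
    println!("{:?}", parse_and_divide("8", "0").map_err(|e| e.to_string()));
    println!("{:?}", parse_and_divide("eight", "2").map_err(|e| e.to_string()));
}
```

The trade-off is that callers only see "some error" and have to downcast to get the concrete type back, which is part of why crates like eyre exist.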

|

|

||||||

|

|

||||||

[Pro tip: eyre (via <a href="https://docs.rs/color-eyre">color-eyre</a>) also

|

|

||||||

has support for adding <a href="https://docs.rs/color-eyre/0.5.4/color_eyre/#custom-sections-for-error-reports-via-help-trait">custom

|

|

||||||

sections and context</a> to errors similar to Go's <a href="https://godoc.org/fmt#Errorf">`fmt.Errorf` `%w`

|

|

||||||

format argument</a>, which will help in real world

|

|

||||||

applications. When you do need to actually make your own errors, you may want to look into

|

|

||||||

crates like <a href="https://docs.rs/thiserror">thiserror</a> to help with

|

|

||||||

automatically generating your error implementation.](conversation://Mara/hacker)

|

|

||||||

|

|

||||||

### The `?` Operator

|

|

||||||

|

|

||||||

In Rust, the `?` operator checks for an error in a function call and if there is

|

|

||||||

one, it automatically returns the error and gives you the result of the function

|

|

||||||

if there was no error. This only works in functions that return either an Option

|

|

||||||

or a Result.

|

|

||||||

|

|

||||||

[The <a href="https://doc.rust-lang.org/std/option/index.html">Option</a> type

|

|

||||||

isn't shown in very much detail here, but it acts like a "this thing might not exist and it's your

|

|

||||||

responsibility to check" container for any value. The closest analogue in Go is

|

|

||||||

making a pointer to a value or possibly putting a value in an `interface{}`

|

|

||||||

(which can be annoying to deal with in practice).](conversation://Mara/hacker)

|

|

||||||

|

|

||||||

```go

|

|

||||||

func doThing() (int, error) {

|

|

||||||

result, err := divide(3, 4)

|

|

||||||

if err != nil {

|

|

||||||

return 0, err

|

|

||||||

}

|

|

||||||

|

|

||||||

return result, nil

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

```rust

|

|

||||||

use eyre::Result;

|

|

||||||

|

|

||||||

fn do_thing() -> Result<i32> {

|

|

||||||

let result = divide(3, 4)?;

|

|

||||||

Ok(result)

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

If the second argument of divide is changed to `0`, then `do_thing` will return

|

|

||||||

an error.

|

|

||||||

|

|

||||||

[And how does that work with eyre?](conversation://Mara/hmm)

|

|

||||||

|

|

||||||

It works with eyre because eyre has its own error wrapper type called

|

|

||||||

[`Report`](https://docs.rs/eyre/0.6.0/eyre/struct.Report.html), which can

|

|

||||||

represent anything that implements the Error trait.
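To make the `?` operator less magical, here is a small std-only sketch that writes the same function twice: once with `?` and once with roughly the `match` it stands for. The function names are made up for illustration, and the hand-written version is an approximation of what the compiler does, not its exact expansion.

```rust
use std::num::ParseIntError;

// With `?`: return early on Err, otherwise unwrap the Ok value.
fn double_short(input: &str) -> Result<i32, ParseIntError> {
    let n = input.parse::<i32>()?;
    Ok(n * 2)
}

// Roughly the same thing written by hand.
fn double_long(input: &str) -> Result<i32, ParseIntError> {
    let n = match input.parse::<i32>() {
        Ok(n) => n,
        // `?` also converts the error with From, which is what lets
        // wrapper types accept many different error types.
        Err(e) => return Err(From::from(e)),
    };
    Ok(n * 2)
}

// `?` works on Option too, inside a function that returns Option.
fn first_char_upper(s: &str) -> Option<char> {
    let c = s.chars().next()?;
    Some(c.to_ascii_uppercase())
}

fn main() {
    println!("{:?}", double_short("21"));
    println!("{:?}", double_long("nope"));
    println!("{:?}", first_char_upper("mara"));
}
```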

|

|

||||||

|

|

||||||

## Macros

|

|

||||||

|

|

||||||

Rust macros are invoked like function calls, with `!` after their name:

|

|

||||||

|

|

||||||

```rust

|

|

||||||

println!("hello, world");

|

|

||||||

```

|

|

||||||

|

|

||||||

## Variables

|

|

||||||

|

|

||||||

Variables are created using `let`:

|

|

||||||

|

|

||||||

```go

|

|

||||||

var foo int

|

|

||||||

var foo = 3

|

|

||||||

foo := 3

|

|

||||||

```

|

|

||||||

|

|

||||||

```rust

|

|

||||||

let foo: i32;

|

|

||||||

let foo = 3;

|

|

||||||

```

|

|

||||||

|

|

||||||

### Mutability

|

|

||||||

|

|

||||||

In Rust, every variable is immutable (unchangeable) by default. If we try to

|

|

||||||

change those variables above we get a compiler error:

|

|

||||||

|

|

||||||

```rust

|

|

||||||

fn main() {

|

|

||||||

let foo: i32;

|

|

||||||

let foo = 3;

|

|

||||||

foo = 4;

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

This makes the compiler return this error:

|

|

||||||

|

|

||||||

```

|

|

||||||

error[E0384]: cannot assign twice to immutable variable `foo`

|

|

||||||

--> src/main.rs:4:5

|

|

||||||

|

|

|

||||||

3 | let foo = 3;

|

|

||||||

| ---

|

|

||||||

| |

|

|

||||||

| first assignment to `foo`

|

|

||||||

| help: make this binding mutable: `mut foo`

|

|

||||||

4 | foo = 4;

|

|

||||||

| ^^^^^^^ cannot assign twice to immutable variable

|

|

||||||

```

|

|

||||||

|

|

||||||

As the compiler suggests, you can create a mutable variable by adding the `mut`

|

|

||||||

keyword after the `let` keyword. There is no analog to this in Go.

|

|

||||||

|

|

||||||

```rust

|

|

||||||

let mut foo: i32 = 0;

|

|

||||||

foo = 4;

|

|

||||||

```

|

|

||||||

|

|

||||||

[This is slightly a lie. There's more advanced cases involving interior

|

|

||||||

mutability and other fun stuff like that, however this is a more advanced topic

|

|

||||||

that isn't covered here.](conversation://Mara/hacker)

|

|

||||||

|

|

||||||

### Lifetimes

|

|

||||||

|

|

||||||

Rust does garbage collection at compile time. It also passes ownership of memory

|

|

||||||

to functions as soon as possible. Lifetimes are how Rust calculates how "long" a

|

|

||||||

given bit of data should exist in the program. Rust will then tell the compiled

|

|

||||||

code to destroy the data from memory as soon as possible.

|

|

||||||

|

|

||||||

[This is slightly inaccurate in order to make this simpler to explain and

|

|

||||||

understand. It's probably more accurate to say that Rust calculates _when_ to

|

|

||||||

collect garbage at compile time, but the difference doesn't really matter for

|

|

||||||

most cases](conversation://Mara/hacker)

|

|

||||||

|

|

||||||

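For example, if `divide` took and returned a type that is not `Copy` (such as a
`String`; plain integers like `i32` are copied instead of moved), this code
would fail to compile because `quo` was moved into the second divide call: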

|

|

||||||

|

|

||||||

```rust

|

|

||||||

let quo = divide(4, 8)?;

|

|

||||||

let other_quo = divide(quo, 5)?;

|

|

||||||

|

|

||||||

// Fails compile because ownership of quo was given to divide to create other_quo

|

|

||||||

let yet_another_quo = divide(quo, 4)?;

|

|

||||||

```

|

|

||||||

|

|

||||||

To work around this you can pass a reference to the divide function:

|

|

||||||

|

|

||||||

```rust

|

|

||||||

let other_quo = divide(&quo, 5)?;

|

|

||||||

let yet_another_quo = divide(&quo, 4)?;

|

|

||||||

```

|

|

||||||

|

|

||||||

Or even create a clone of it:

|

|

||||||

|

|

||||||

```rust

|

|

||||||

let other_quo = divide(quo.clone(), 5)?;

|

|

||||||

let yet_another_quo = divide(quo, 4)?;

|

|

||||||

```

|

|

||||||

|

|

||||||

[You can also get more fancy with <a

|

|

||||||

href="https://doc.rust-lang.org/rust-by-example/scope/lifetime/explicit.html">explicit

|

|

||||||

lifetime annotations</a>, however as of Rust's 2018 edition they aren't usually

|

|

||||||

required unless you are doing something weird. This is something that is also

|

|

||||||

covered in more detail in <a

|

|

||||||

href="https://doc.rust-lang.org/stable/book/ch04-00-understanding-ownership.html">The

|

|

||||||

Rust Book</a>.](conversation://Mara/hacker)
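Whether you actually hit the move error described in this section depends on the type involved: plain integers like `i32` are `Copy`, so they get copied instead of moved. Here is a minimal sketch of the three options using an owned `String` instead. The `shout`, `shout_ref` and `ownership_demo` names are made up for illustration and are not part of the original `divide` example:

```rust
// Hypothetical helpers for illustration only.

/// Takes ownership of its argument; the caller cannot use the value afterwards.
fn shout(s: String) -> String {
    s.to_uppercase()
}

/// Borrows its argument; the caller keeps ownership.
fn shout_ref(s: &str) -> String {
    s.to_uppercase()
}

fn ownership_demo() -> (String, String, String) {
    let quo = String::from("quo");

    // Borrowing: quo is still usable after this call.
    let a = shout_ref(&quo);

    // Cloning: shout consumes the clone, not the original.
    let b = shout(quo.clone());

    // Moving: this is the last use of quo, so giving it away is fine.
    let c = shout(quo);
    // shout(quo); // uncommenting this fails to compile: use of moved value `quo`

    (a, b, c)
}
```

Calling `ownership_demo()` runs all three variants in order; the commented-out line is the one the compiler would reject.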

|

|

||||||

|

|

||||||

### Passing Mutability

|

|

||||||

|

|

||||||

Sometimes functions need mutable variables. To pass a mutable reference, add

|

|

||||||

`&mut` before the name of the variable:

|

|

||||||

|

|

||||||

```rust

|

|

||||||

let something = do_something_to_quo(&mut quo)?;

|

|

||||||

```
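The receiving side isn't shown here, so as a rough sketch, assume a hypothetical `add_one` function standing in for `do_something_to_quo`. The parameter is declared as `&mut`, and the caller's binding has to be declared with `let mut` before it can be lent out mutably:

```rust
/// Hypothetical function: takes a mutable reference and changes the value in place.
fn add_one(n: &mut i32) {
    *n += 1; // dereference to modify the caller's value
}

fn mutability_demo() -> i32 {
    let mut quo = 4; // must be `mut` to hand out a `&mut` reference
    add_one(&mut quo);
    add_one(&mut quo);
    quo
}
```

Dropping the `mut` from `let mut quo` makes both `add_one` calls fail to compile, which is the same rule as the immutable assignment error from earlier.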

|

|

||||||

|

|

||||||

## Project Setup

|

|

||||||

|

|

||||||

### Imports

|

|

||||||

|

|

||||||

External dependencies are declared using the [Cargo.toml

|

|

||||||

file](https://doc.rust-lang.org/cargo/reference/specifying-dependencies.html):

|

|

||||||

|

|

||||||

```toml

|

|

||||||

# Cargo.toml

|

|

||||||

|

|

||||||

[dependencies]

|

|

||||||

eyre = "0.6"

|

|

||||||

```

|

|

||||||

|

|

||||||

This depends on the crate [eyre](https://crates.io/crates/eyre) at version

|

|

||||||

0.6.x.

|

|

||||||

|

|

||||||

[You can do much more with version requirements with cargo, see more <a

|

|

||||||

href="https://doc.rust-lang.org/cargo/reference/specifying-dependencies.html">here</a>.](conversation://Mara/hacker)

|

|

||||||

|

|

||||||

Dependencies can also have optional features:

|

|

||||||

|

|

||||||

```toml

|

|

||||||

# Cargo.toml

|

|

||||||

|

|

||||||

[dependencies]

|

|

||||||

reqwest = { version = "0.10", features = ["json"] }

|

|

||||||

```

|

|

||||||

|

|

||||||

This depends on the crate [reqwest](https://crates.io/crates/reqwest) at version 0.10.x

|

|

||||||

with the `json` feature enabled (in this case it enables reqwest being able to

|

|

||||||

automagically convert things to/from JSON using Serde).

|

|

||||||

|

|

||||||

External dependencies can be used with the `use` statement:

|

|

||||||

|

|

||||||

```go

|

|

||||||

// go

|

|

||||||

|

|

||||||

import "github.com/foo/bar"

|

|

||||||

```

|

|

||||||

|

|

||||||

```rust

|

|

||||||

use foo; // -> foo now has the members of crate foo behind the :: operator

|

|

||||||

use foo::Bar; // -> Bar is now exposed as a type in this file

|

|

||||||

|

|

||||||

use eyre::{eyre, Result}; // exposes the eyre! and Result members of eyre

|

|

||||||

```

|

|

||||||

|

|

||||||

[This doesn't cover how the <a

|

|

||||||

href="http://www.sheshbabu.com/posts/rust-module-system/">module system</a>

|

|

||||||

works, however the post I linked there covers this better than I

|

|

||||||

can.](conversation://Mara/hacker)

|

|

||||||

|

|

||||||

## Async/Await

|

|

||||||

|

|

||||||

Async functions may be interrupted to let other things execute as needed. This

|

|

||||||

program uses [tokio](https://tokio.rs/) to handle async tasks. To run an async

|

|

||||||

task and wait for its result, do this:

|

|

||||||

|

|

||||||

```rust

|

|

||||||

let printer_fact = reqwest::get("https://printerfacts.cetacean.club/fact")

|

|

||||||

.await?

|

|

||||||

.text()

|

|

||||||

.await?;

|

|

||||||

println!("your printer fact is: {}", printer_fact);

|

|

||||||

```

|

|

||||||

|

|

||||||

This will populate `printer_fact` with an amusing fact about everyone's favorite

|

|

||||||

household pet, the [printer](https://printerfacts.cetacean.club).

|

|

||||||

|

|

||||||

To make an async function, add the `async` keyword before the `fn` keyword:

|

|

||||||

|

|

||||||

```rust

|

|

||||||

async fn get_text(url: String) -> Result<String> {

|

|

||||||

Ok(reqwest::get(&url)

|

|

||||||

.await?

|

|

||||||

.text()

|

|

||||||

.await?) // wrap the String in Ok to match the Result<String> return type

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

This can then be called like this:

|

|

||||||

|

|

||||||

```rust

|

|

||||||

let printer_fact = get_text("https://printerfacts.cetacean.club/fact".to_string()).await?;

|

|

||||||

```

|

|

||||||

|

|

||||||

## Public/Private Types and Functions

|

|

||||||

|

|

||||||

Rust has three privacy levels for functions:

|

|

||||||

|

|

||||||

- Only visible to the current module (no keyword, lowercase in Go)

|

|

||||||

- Visible to anything in the current crate (`pub(crate)`, internal packages in

|

|

||||||

Go)

|

|

||||||

- Visible to everyone (`pub`, upper case in Go)

|

|

||||||

|

|

||||||

[You can't get a perfect analog to `pub(crate)` in Go, but <a

|

|

||||||

href="https://docs.google.com/document/d/1e8kOo3r51b2BWtTs_1uADIA5djfXhPT36s6eHVRIvaU/edit">internal

|

|

||||||

packages</a> can get close to this behavior. Additionally you can have a lot

|

|

||||||

more control over access levels than this, see <a

|

|

||||||

href="https://doc.rust-lang.org/nightly/reference/visibility-and-privacy.html">here</a>

|

|

||||||

for more information.](conversation://Mara/hacker)
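To make the three levels concrete, here is a small sketch. The `client` module, its fields and the `privacy_demo` function are all invented for illustration:

```rust
mod client {
    /// Visible to anyone who can see the `client` module.
    pub struct Client {
        pub name: String,
        token: String, // private: only code inside `client` can touch this
    }

    impl Client {
        pub fn new(name: &str, token: &str) -> Client {
            Client {
                name: name.to_string(),
                token: token.to_string(),
            }
        }

        /// Visible anywhere in this crate, but not to other crates.
        pub(crate) fn token_len(&self) -> usize {
            self.redacted().len()
        }

        /// Private helper: only callable inside `client`.
        fn redacted(&self) -> String {
            "*".repeat(self.token.len())
        }
    }
}

fn privacy_demo() -> (String, usize) {
    let c = client::Client::new("mara", "hunter2");
    // c.token and c.redacted() would not compile here: they are private to `client`.
    (c.name.clone(), c.token_len())
}
```

Code outside `client` can read `name` and call `token_len`, but the compiler rejects any attempt to reach `token` or `redacted` directly.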

|

|

||||||

|

|

||||||

## Structures

|

|

||||||

|

|

||||||

Rust structures are created using the `struct` keyword:

|

|

||||||

|

|

||||||

```go

|

|

||||||

type Client struct {

|

|

||||||

Token string

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

```rust

|

|

||||||

pub struct Client {

|

|

||||||

pub token: String,

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

If the `pub` keyword is not specified before a member name, it will not be

|

|

||||||

usable outside the module it is defined in:

|

|

||||||

|

|

||||||

```go

|

|

||||||

type Client struct {

|

|

||||||

token string

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

```rust

|

|

||||||

pub(crate) struct Client {

|

|

||||||

token: String,

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

### Encoding structs to JSON

|

|

||||||

|

|

||||||

[serde](https://serde.rs) is used to convert structures to JSON. The Rust

|

|

||||||

compiler's

|

|

||||||

[derive](https://doc.rust-lang.org/stable/rust-by-example/trait/derive.html)

|

|

||||||

feature is used to automatically implement the conversion logic.

|

|

||||||

|

|

||||||

```go

|

|

||||||

type Response struct {

|

|

||||||

Name string `json:"name"`

|

|

||||||

Description *string `json:"description,omitempty"`

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

```rust

|

|

||||||

use serde::{Serialize, Deserialize};

|

|

||||||

|

|

||||||

#[derive(Serialize, Deserialize, Debug)]

|

|

||||||

pub(crate) struct Response {

|

|

||||||

pub name: String,

|

|

||||||

pub description: Option<String>,

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

## Strings

|

|

||||||

|

|

||||||

Rust has a few string types that do different things. You can read more about

|

|

||||||

this [here](https://fasterthanli.me/blog/2020/working-with-strings-in-rust/),

|

|

||||||

but at a high level most projects only use a few of them:

|

|

||||||

|

|

||||||

- `&str`, a slice reference to a String owned by someone else

|

|

||||||

- `String`, an owned UTF-8 string

|

|

||||||

- `PathBuf`, a filepath string (encoded in whatever encoding the OS running this

|

|

||||||

code uses for filesystems)

|

|

||||||

|

|

||||||

The strings are different types for safety reasons. See the linked blogpost for

|

|

||||||

more detail about this.
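As a quick sketch of how these three types tend to show up together (the `greet` and `strings_demo` functions and the file names are invented for this example):

```rust
use std::path::PathBuf;

/// Borrows a string slice; works with both `&str` literals and `&String`.
fn greet(name: &str) -> String {
    format!("hello, {}", name) // builds and returns a new owned String
}

fn strings_demo() -> (String, String, PathBuf) {
    let literal: &str = "Mara"; // borrowed string slice
    let owned: String = literal.to_string(); // owned, heap-allocated copy

    let a = greet(literal);
    let b = greet(&owned); // &String coerces to &str

    // PathBuf is an owned path; join() appends a component.
    let path = PathBuf::from("blog").join("post.md");

    (a, b, path)
}
```

Taking `&str` in function arguments is a common choice because callers can pass either a literal or a borrowed `String` without copying anything.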

|

|

||||||

|

|

||||||

## Enumerations / Tagged Unions

|

|

||||||

|

|

||||||

Enumerations, also known as tagged unions, are a way to specify a superposition

|

|

||||||

of one of a few different kinds of values in one type. A neat way to show them

|

|

||||||

off (along with some other fancy features like the derivation system) is with the

|

|

||||||

[structopt](https://docs.rs/structopt/0.3.14/structopt/) crate. There is no easy

|

|

||||||

analog for this in Go.

|

|

||||||

|

|

||||||

[We've actually been dealing with enumerations ever since we touched the Result

|

|

||||||

type earlier. <a

|

|

||||||

href="https://doc.rust-lang.org/std/result/enum.Result.html">Result</a> and <a

|

|

||||||

href="https://doc.rust-lang.org/std/option/enum.Option.html">Option</a> are

|

|

||||||

implemented with enumerations.](conversation://Mara/hacker)

|

|

||||||

|

|

||||||

```rust

|

|

||||||

#[derive(StructOpt, Debug)]

|

|

||||||

#[structopt(about = "A simple release management tool")]

|

|

||||||

pub(crate) enum Cmd {

|

|

||||||

/// Creates a new release for a git repo

|

|

||||||

Cut {

|

|

||||||

#[structopt(flatten)]

|

|

||||||

common: Common,

|

|

||||||

/// Changelog location

|

|

||||||

#[structopt(long, short, default_value="./CHANGELOG.md")]

|

|

||||||

changelog: PathBuf,

|

|

||||||

},

|

|

||||||

|

|

||||||

/// Runs releases as triggered by GitHub Actions

|

|

||||||

GitHubAction {

|

|

||||||

#[structopt(flatten)]

|

|

||||||

gha: GitHubAction,

|

|

||||||

},

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

Enum variants can be matched using the `match` keyword:

|

|

||||||

|

|

||||||

```rust

|

|

||||||

match cmd {

|

|

||||||

Cmd::Cut { common, changelog } => {

|

|

||||||

cmd::cut::run(common, changelog).await

|

|

||||||

}

|

|

||||||

Cmd::GitHubAction { gha } => {

|

|

||||||

cmd::github_action::run(gha).await

|

|

||||||

}

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

All variants of an enum must be matched in order for the code to compile.

|

|

||||||

|

|

||||||

[This code was borrowed from <a

|

|

||||||

href="https://github.com/lightspeed/palisade">palisade</a> in order to

|

|

||||||

demonstrate this better. If you want to see these patterns in action, check this

|

|

||||||

repository out!](conversation://Mara/hacker)
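If you want to poke at exhaustive matching without pulling in structopt, here is a standard-library-only sketch. This `Cmd` enum is a cut-down, hypothetical stand-in for the one above, and `describe` is made up for the example:

```rust
/// Hypothetical command enum, loosely modelled on the `Cmd` example above.
enum Cmd {
    Cut { changelog: String },
    GitHubAction,
}

fn describe(cmd: &Cmd) -> String {
    // Removing either arm makes this fail to compile with a
    // "non-exhaustive patterns" error.
    match cmd {
        Cmd::Cut { changelog } => format!("cutting a release using {}", changelog),
        Cmd::GitHubAction => "running under GitHub Actions".to_string(),
    }
}
```

Adding a third variant to `Cmd` later would break the build until `describe` handles it, which is the point of the exhaustiveness check.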

|

|

||||||

|

|

||||||

## Testing

|

|

||||||

|

|

||||||

Test functions need to be marked with the `#[test]` annotation, then they will

|

|

||||||

be run by `cargo test`:

|

|

||||||

|

|

||||||

```rust

|

|

||||||

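#[cfg(test)] // only compile this module when running tests
mod tests { // not required but it is good practice
use super::*; // bring the parent module's items into scope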

|

|

||||||

#[test]

|

|

||||||

fn math_works() {

|

|

||||||

assert_eq!(2 + 2, 4);

|

|

||||||

}

|

|

||||||

|

|

||||||

#[tokio::test] // needs tokio as a dependency

|

|

||||||

async fn http_works() {

|

|

||||||

let _ = get_html("https://within.website").await.unwrap();

|

|

||||||

}

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

Avoid the use of `unwrap()` outside of tests. In the wrong cases, using

|

|

||||||

`unwrap()` in production code can cause the server to crash and can incur data

|

|

||||||

loss.

|

|

||||||

|

|

||||||

[Alternatively, you can also use the <a href="https://learning-rust.github.io/docs/e4.unwrap_and_expect.html#expect">`.expect()`</a> method instead

|

|

||||||

of `.unwrap()`. This lets you attach a message that will be shown when the

|

|

||||||

result isn't Ok.](conversation://Mara/hacker)
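Outside of tests, one common alternative to `unwrap()` is to hand the error back with `?` or to pick a fallback with `match`. A minimal sketch, assuming hypothetical `parse_port` and `port_or_default` helpers and an arbitrary default of 8080 that are not part of this codebase:

```rust
use std::num::ParseIntError;

/// Hypothetical helper: returns the error to the caller instead of panicking.
fn parse_port(raw: &str) -> Result<u16, ParseIntError> {
    let port = raw.trim().parse::<u16>()?; // `?` propagates the error upwards
    Ok(port)
}

/// Falls back to a default instead of crashing on bad input.
fn port_or_default(raw: &str) -> u16 {
    match parse_port(raw) {
        Ok(port) => port,
        Err(_) => 8080,
    }
}
```

Neither function can panic on bad input, so a malformed value ends up as an error or a default rather than a crashed server.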

|

|

||||||

|

|

||||||

---

|

|

||||||

|

|

||||||

This is by no means comprehensive; see the Rust Book or [Learn X in Y Minutes

|

|

||||||

Where X = Rust](https://learnxinyminutes.com/docs/rust/) for more information.

|

|

||||||

This code is written to be as boring and obvious as possible. If things don't

|

|

||||||

make sense, please reach out and don't be afraid to ask questions.

|

|

||||||

|

|

@ -23,13 +23,13 @@ This is a surprisingly hard question to answer. Most of the time though, I know

|

||||||

|

|

||||||

Art doesn't have to follow conventional ideas of what most people think "art" is. Art can be just about anything that you can classify as art. As a conventional example, consider something like the Mona Lisa:

|

Art doesn't have to follow conventional ideas of what most people think "art" is. Art can be just about anything that you can classify as art. As a conventional example, consider something like the Mona Lisa:

|

||||||

|

|

||||||

|

<center>  </center>

|

||||||

|

|

||||||

People will accept this as art without much argument. It's a painting, it obviously took a lot of skill and time to create. It is said that Leonardo Da Vinci (the artist of the painting) created it partially [as a contribution to the state of the art of oil painting][monalisawhy].

|

People will accept this as art without much argument. It's a painting, it obviously took a lot of skill and time to create. It is said that Leonardo Da Vinci (the artist of the painting) created it partially [as a contribution to the state of the art of oil painting][monalisawhy].

|

||||||

|

|

||||||

So that painting is art, and a lot of people would consider it art; so what *would* a lot of people *not* consider art? Here's an example:

|

So that painting is art, and a lot of people would consider it art; so what *would* a lot of people *not* consider art? Here's an example:

|

||||||

|

|

||||||

|

<center>  </center>

|

||||||

|

|

||||||

This is *Untitled (Perfect Lovers)* by Felix Gonzalez. If you just take a look at it without context, it's just two battery-operated clocks on a wall. Where is the expertise and the like that goes into this? This is just the result of someone buying two clocks from the store and putting them somewhere, right?

|

This is *Untitled (Perfect Lovers)* by Felix Gonzalez. If you just take a look at it without context, it's just two battery-operated clocks on a wall. Where is the expertise and the like that goes into this? This is just the result of someone buying two clocks from the store and putting them somewhere, right?

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@ -1,229 +0,0 @@

|

||||||

---

|

|

||||||

title: "</kubernetes>"

|

|

||||||

date: 2021-01-03

|

|

||||||

---

|

|

||||||

|

|

||||||

# </kubernetes>

|

|

||||||

|

|

||||||

Well, since I posted [that last post](/blog/k8s-pondering-2020-12-31) I have had

|

|

||||||

an adventure. A good friend pointed out a server host that I had missed when I

|

|

||||||

was looking for other places to use, and now I have migrated my blog to this new

|

|

||||||

server. As of yesterday, I now run my website on a dedicated server in Finland.

|

|

||||||

Here is the story of my journey to migrate 6 years of cruft and technical debt

|

|

||||||

to this new server.

|

|

||||||

|

|

||||||

Let's talk about this goliath of a server. This server is an AX41 from Hetzner.

|

|

||||||

It has 64 GB of RAM, a 512 GB NVMe drive, three 2 TB drives, and a Ryzen 3600. For

|

|

||||||

all practical concerns, this beast is beyond overkill and rivals my workstation

|

|

||||||

tower in everything but the GPU power. I have named it `lufta`, which is the

|

|

||||||

word for feather in [L'ewa](https://lewa.within.website/dictionary.html).

|

|

||||||

|

|

||||||

## Assimilation

|

|

||||||

|

|

||||||

For my server setup process, the first step is to assimilate it. In this step I

|

|

||||||

get a base NixOS install on it somehow. Since I was using Hetzner, I was able to

|

|

||||||

boot into a NixOS install image using the process documented

|

|

||||||

[here](https://nixos.wiki/wiki/Install_NixOS_on_Hetzner_Online). Then I decided

|

|

||||||

that it would also be cool to have this server use

|

|

||||||

[zfs](https://en.wikipedia.org/wiki/ZFS) as its filesystem to take advantage of

|

|

||||||

its legendary subvolume and snapshotting features.

|

|

||||||

|

|

||||||

So I wrote up a bootstrap system definition like the Hetzner tutorial said and

|

|

||||||

ended up with `hosts/lufta/bootstrap.nix`:

|

|

||||||

|

|

||||||

```nix

|

|

||||||

{ pkgs, ... }:

|

|

||||||

|

|

||||||

{

|

|

||||||

services.openssh.enable = true;

|

|

||||||

users.users.root.openssh.authorizedKeys.keys = [

|

|

||||||

"ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIPg9gYKVglnO2HQodSJt4z4mNrUSUiyJQ7b+J798bwD9 cadey@shachi"

|

|

||||||

];

|

|

||||||

|

|

||||||

networking.usePredictableInterfaceNames = false;

|

|

||||||

systemd.network = {

|

|

||||||

enable = true;

|

|

||||||

networks."eth0".extraConfig = ''

|

|

||||||

[Match]

|

|

||||||

Name = eth0

|

|

||||||

[Network]

|

|

||||||

# Add your own assigned ipv6 subnet here!

|

|

||||||

Address = 2a01:4f9:3a:1a1c::/64

|

|

||||||

Gateway = fe80::1

|

|

||||||

# optionally you can do the same for ipv4 and disable DHCP (networking.dhcpcd.enable = false;)

|

|

||||||

Address = 135.181.162.99/26

|

|

||||||

Gateway = 135.181.162.65

|

|

||||||

'';

|

|

||||||

};

|

|

||||||

|

|

||||||

boot.supportedFilesystems = [ "zfs" ];

|

|

||||||

|

|

||||||

environment.systemPackages = with pkgs; [ wget vim zfs ];

|

|

||||||

}

|

|

||||||

```

|

|

||||||

|

|

||||||

Then I fired up the kexec tarball and waited for the server to boot into a NixOS

|

|

||||||

live environment. A few minutes later I was in. I started formatting the drives

|

|

||||||

according to the [NixOS install

|

|

||||||

guide](https://nixos.org/manual/nixos/stable/index.html#sec-installation) with

|

|

||||||

one major difference: I added a `/boot` ext4 partition on the SSD. This allows

|

|

||||||

me to have the system root device on zfs. I added the disks to a `raidz1` pool

|

|

||||||

and created a few volumes. I also added the SSD as a log device so I get SSD

|

|

||||||

caching.

|

|

||||||

|

|

||||||

From there I installed NixOS as normal and rebooted the server. It booted

|

|

||||||

normally. I had a shiny new NixOS server in the cloud! I noticed that the server

|

|

||||||

had booted into NixOS unstable as opposed to NixOS 20.09 like my other nodes. I

|

|

||||||

thought "ah, well, that probably isn't a problem" and continued to the

|

|

||||||

configuration step.

|

|

||||||

|

|

||||||

[That's ominous...](conversation://Mara/hmm)

|

|

||||||

|

|

||||||

## Configuration

|

|

||||||

|

|

||||||

Now that the server was assimilated and I could SSH into it, the next step was

|

|

||||||

to configure it to run my services. While I was waiting for Hetzner to provision

|

|

||||||

my server I ported a bunch of my services over to Nixops services [a-la this

|

|

||||||

post](/blog/nixops-services-2020-11-09) in [this

|

|

||||||

folder](https://github.com/Xe/nixos-configs/tree/master/common/services) of my

|

|

||||||

configs repo.

|

|

||||||

|

|

||||||

Now that I had them, it was time to add this server to my Nixops setup. So I

|

|

||||||

opened the [nixops definition

|

|

||||||

folder](https://github.com/Xe/nixos-configs/tree/master/nixops/hexagone) and

|

|

||||||

added the metadata for `lufta`. Then I added it to my Nixops deployment with

|

|

||||||

this command:

|

|

||||||

|

|

||||||

```console

|

|

||||||

$ nixops modify -d hexagone -n hexagone *.nix

|

|

||||||

```

|

|

||||||

|

|

||||||

Then I copied over the autogenerated config from `lufta`'s `/etc/nixos/` folder

|

|

||||||

into

|

|

||||||

[`hosts/lufta`](https://github.com/Xe/nixos-configs/tree/master/hosts/lufta) and

|

|

||||||

ran a `nixops deploy` to add some other base configuration.

|

|

||||||

|

|

||||||

## Migration

|

|

||||||

|

|

||||||

Once that was done, I started enabling my services and pushing configs to test

|

|

||||||

them. After I got to a point where I thought things would work I opened up the

|

|

||||||

Kubernetes console and started deleting deployments on my kubernetes cluster as

|

|

||||||

I felt "safe" to migrate them over. Then I saw the deployments come back. I

|

|

||||||

deleted them again and they came back again.

|

|

||||||

|

|

||||||

Oh, right. I enabled that one Kubernetes service that made it intentionally hard

|

|

||||||

to delete deployments. One clever set of scale-downs and kills later and I was

|

|

||||||

able to kill things with wild abandon.

|

|

||||||

|

|

||||||

I copied over the gitea data with `rsync` running in the kubernetes deployment.

|

|

||||||

Then I killed the gitea deployment, updated DNS and reran a whole bunch of gitea

|

|

||||||

jobs to resanify the environment. I did a test clone on a few of my repos and

|

|

||||||

then I deleted the gitea volume from DigitalOcean.

|

|

||||||

|

|

||||||

Moving over the other deployments from Kubernetes into NixOS services was

|

|

||||||

somewhat easy, however I did need to repackage a bunch of my programs and static

|

|

||||||

sites for NixOS. I made the

|

|