Compare commits

85 Commits

| Author | SHA1 | Date |
|---|---|---|
| | 990cce7267 | |
| | 6456d75502 | |
| | 1d95a5c073 | |
| | 49f4ba9847 | |
| | 3dba1d98f8 | |
| | 90332b323d | |
| | 444eee96b0 | |
| | b40cb9aa78 | |
| | 8b2b647257 | |
| | dc48c5e5dc | |
| | 0f5c06fa44 | |
| | 1b91c59d59 | |
| | bc71c3c278 | |
| | 4bcc848bb1 | |
| | 17af42bc69 | |
| | 1ffc4212d6 | |
| | 811995223c | |
| | 585d39ea62 | |
| | 201abedb14 | |
| | 66233bcd40 | |
| | d2455aa1c1 | |
| | a359f54a91 | |
| | 1bd858680d | |
| | 49a4d7cbea | |
| | 0c6d16cba8 | |
| | 09c726a0c9 | |
| | a22df5f544 | |
| | 1ae1cc2945 | |
| | 951542ccf2 | |
| | d63f393193 | |
| | 6788a5510b | |
| | 9c5250d10a | |
| | 474fd908bc | |
| | 43057536ad | |
| | 2389af7ee5 | |
| | 2b7a64e57d | |
| | b1cb704fa4 | |
| | 695ebccd40 | |
| | 0e33b75b26 | |
| | d3e94ad834 | |
| | 9e4566ba67 | |
| | 276023d371 | |
| | ccdee6431d | |
| | 098b7183e7 | |
| | 8b92d8d8ee | |
| | 9a9c474c76 | |
| | c5fc9336f5 | |
| | 99197f4843 | |
| | 233ea76204 | |
| | d35f62351f | |
| | 7c7981bf70 | |
| | a2b1a4afbf | |
| | 23c181ee72 | |
| | b0ae633c0c | |
| | a6eadb1051 | |
| | f5a86eafb8 | |
| | 2dde44763d | |
| | 92d812f74e | |
| | 9371e7f848 | |
| | f8ae558738 | |
| | e0a1744989 | |
| | 7f97bf7ed4 | |
| | 5ff4af60f8 | |
| | 7ac6c03341 | |
| | 8d74418089 | |
| | f643496416 | |
| | 089b14788d | |
| | d2deb4470c | |
| | 895554a593 | |
| | 580cac815e | |
| | dbccb14b48 | |
| | ec78ddfdd7 | |
| | 3b63ce9f26 | |
| | e4200399e1 | |
| | 2793da4845 | |
| | ea94e52dd3 | |
| | 0d3655e726 | |
| | 0b27a424ea | |
| | 5bd164fd33 | |
| | 7e6272bb76 | |
| | 683a3ec5e6 | |
| | 03eea22894 | |
| | d32a038572 | |
| | 27c8145da3 | |
| | 116bc13b6b | |

|

|

@ -11,31 +11,8 @@ jobs:

|

||||||

runs-on: ubuntu-latest

|

runs-on: ubuntu-latest

|

||||||

steps:

|

steps:

|

||||||

- uses: actions/checkout@v1

|

- uses: actions/checkout@v1

|

||||||

- uses: cachix/install-nix-action@v6

|

- uses: cachix/install-nix-action@v12

|

||||||

- uses: cachix/cachix-action@v3

|

- uses: cachix/cachix-action@v7

|

||||||

with:

|

with:

|

||||||

name: xe

|

name: xe

|

||||||

- name: Log into GitHub Container Registry

|

- run: nix build --no-link

|

||||||

run: echo "${{ secrets.CR_PAT }}" | docker login https://ghcr.io -u ${{ github.actor }} --password-stdin

|

|

||||||

- name: Docker push

|

|

||||||

run: |

|

|

||||||

docker load -i result

|

|

||||||

docker tag xena/christinewebsite:latest ghcr.io/xe/site:$GITHUB_SHA

|

|

||||||

docker push ghcr.io/xe/site

|

|

||||||

release:

|

|

||||||

runs-on: ubuntu-latest

|

|

||||||

needs: docker-build

|

|

||||||

if: github.ref == 'refs/heads/main'

|

|

||||||

steps:

|

|

||||||

- uses: actions/checkout@v1

|

|

||||||

- uses: cachix/install-nix-action@v6

|

|

||||||

- name: deploy

|

|

||||||

run: ./scripts/release.sh

|

|

||||||

env:

|

|

||||||

DIGITALOCEAN_ACCESS_TOKEN: ${{ secrets.DIGITALOCEAN_TOKEN }}

|

|

||||||

MI_TOKEN: ${{ secrets.MI_TOKEN }}

|

|

||||||

PATREON_ACCESS_TOKEN: ${{ secrets.PATREON_ACCESS_TOKEN }}

|

|

||||||

PATREON_CLIENT_ID: ${{ secrets.PATREON_CLIENT_ID }}

|

|

||||||

PATREON_CLIENT_SECRET: ${{ secrets.PATREON_CLIENT_SECRET }}

|

|

||||||

PATREON_REFRESH_TOKEN: ${{ secrets.PATREON_REFRESH_TOKEN }}

|

|

||||||

DHALL_PRELUDE: https://raw.githubusercontent.com/dhall-lang/dhall-lang/v17.0.0/Prelude/package.dhall

|

|

||||||

|

|

|

||||||

|

|

@ -1,35 +0,0 @@

|

||||||

name: Rust

|

|

||||||

on:

|

|

||||||

push:

|

|

||||||

branches: [ main ]

|

|

||||||

pull_request:

|

|

||||||

branches: [ main ]

|

|

||||||

env:

|

|

||||||

CARGO_TERM_COLOR: always

|

|

||||||

jobs:

|

|

||||||

build:

|

|

||||||

runs-on: ubuntu-latest

|

|

||||||

steps:

|

|

||||||

- uses: actions/checkout@v2

|

|

||||||

- name: Build

|

|

||||||

run: cargo build --all

|

|

||||||

- name: Run tests

|

|

||||||

run: |

|

|

||||||

cargo test

|

|

||||||

(cd lib/jsonfeed && cargo test)

|

|

||||||

(cd lib/patreon && cargo test)

|

|

||||||

env:

|

|

||||||

PATREON_ACCESS_TOKEN: ${{ secrets.PATREON_ACCESS_TOKEN }}

|

|

||||||

PATREON_CLIENT_ID: ${{ secrets.PATREON_CLIENT_ID }}

|

|

||||||

PATREON_CLIENT_SECRET: ${{ secrets.PATREON_CLIENT_SECRET }}

|

|

||||||

PATREON_REFRESH_TOKEN: ${{ secrets.PATREON_REFRESH_TOKEN }}

|

|

||||||

release:

|

|

||||||

runs-on: ubuntu-latest

|

|

||||||

if: github.ref == 'refs/heads/main'

|

|

||||||

steps:

|

|

||||||

- uses: actions/checkout@v2

|

|

||||||

- name: Releases via Palisade

|

|

||||||

run: |

|

|

||||||

docker run --rm --name palisade -v $(pwd):/workspace -e GITHUB_TOKEN -e GITHUB_REF -e GITHUB_REPOSITORY --workdir /workspace ghcr.io/xe/palisade palisade github-action

|

|

||||||

env:

|

|

||||||

GITHUB_TOKEN: ${{ secrets.CR_PAT }}

|

|

||||||

File diff suppressed because it is too large


26

Cargo.toml


|

|

@ -1,6 +1,6 @@

|

||||||

[package]

|

[package]

|

||||||

name = "xesite"

|

name = "xesite"

|

||||||

version = "2.1.0"

|

version = "2.2.0"

|

||||||

authors = ["Christine Dodrill <me@christine.website>"]

|

authors = ["Christine Dodrill <me@christine.website>"]

|

||||||

edition = "2018"

|

edition = "2018"

|

||||||

build = "src/build.rs"

|

build = "src/build.rs"

|

||||||

|

|

@ -11,47 +11,49 @@ repository = "https://github.com/Xe/site"

|

||||||

[dependencies]

|

[dependencies]

|

||||||

color-eyre = "0.5"

|

color-eyre = "0.5"

|

||||||

chrono = "0.4"

|

chrono = "0.4"

|

||||||

comrak = "0.8"

|

comrak = "0.9"

|

||||||

envy = "0.4"

|

envy = "0.4"

|

||||||

glob = "0.3"

|

glob = "0.3"

|

||||||

hyper = "0.13"

|

hyper = "0.14"

|

||||||

kankyo = "0.3"

|

kankyo = "0.3"

|

||||||

lazy_static = "1.4"

|

lazy_static = "1.4"

|

||||||

log = "0.4"

|

log = "0.4"

|

||||||

mime = "0.3.0"

|

mime = "0.3.0"

|

||||||

prometheus = { version = "0.10", default-features = false, features = ["process"] }

|

prometheus = { version = "0.11", default-features = false, features = ["process"] }

|

||||||

rand = "0"

|

rand = "0"

|

||||||

serde_dhall = "0.7.1"

|

reqwest = { version = "0.11", features = ["json"] }

|

||||||

|

sdnotify = { version = "0.1", default-features = false }

|

||||||

|

serde_dhall = "0.9.0"

|

||||||

serde = { version = "1", features = ["derive"] }

|

serde = { version = "1", features = ["derive"] }

|

||||||

serde_yaml = "0.8"

|

serde_yaml = "0.8"

|

||||||

sitemap = "0.4"

|

sitemap = "0.4"

|

||||||

thiserror = "1"

|

thiserror = "1"

|

||||||

tokio = { version = "0.2", features = ["macros"] }

|

tokio = { version = "1", features = ["full"] }

|

||||||

tracing = "0.1"

|

tracing = "0.1"

|

||||||

tracing-futures = "0.2"

|

tracing-futures = "0.2"

|

||||||

tracing-subscriber = { version = "0.2", features = ["fmt"] }

|

tracing-subscriber = { version = "0.2", features = ["fmt"] }

|

||||||

warp = "0.2"

|

warp = "0.3"

|

||||||

xml-rs = "0.8"

|

xml-rs = "0.8"

|

||||||

url = "2"

|

url = "2"

|

||||||

|

uuid = { version = "0.8", features = ["serde", "v4"] }

|

||||||

|

|

||||||

# workspace dependencies

|

# workspace dependencies

|

||||||

|

cfcache = { path = "./lib/cfcache" }

|

||||||

go_vanity = { path = "./lib/go_vanity" }

|

go_vanity = { path = "./lib/go_vanity" }

|

||||||

jsonfeed = { path = "./lib/jsonfeed" }

|

jsonfeed = { path = "./lib/jsonfeed" }

|

||||||

|

mi = { path = "./lib/mi" }

|

||||||

patreon = { path = "./lib/patreon" }

|

patreon = { path = "./lib/patreon" }

|

||||||

|

|

||||||

[build-dependencies]

|

[build-dependencies]

|

||||||

ructe = { version = "0.12", features = ["warp02"] }

|

ructe = { version = "0.13", features = ["warp02"] }

|

||||||

|

|

||||||

[dev-dependencies]

|

[dev-dependencies]

|

||||||

pfacts = "0"

|

pfacts = "0"

|

||||||

serde_json = "1"

|

serde_json = "1"

|

||||||

eyre = "0.6"

|

eyre = "0.6"

|

||||||

reqwest = { version = "0.10", features = ["json"] }

|

|

||||||

pretty_env_logger = "0"

|

pretty_env_logger = "0"

|

||||||

|

|

||||||

[workspace]

|

[workspace]

|

||||||

members = [

|

members = [

|

||||||

"./lib/go_vanity",

|

"./lib/*",

|

||||||

"./lib/jsonfeed",

|

|

||||||

"./lib/patreon"

|

|

||||||

]

|

]

|

||||||

|

|

|

||||||

4

LICENSE


|

|

@ -1,4 +1,4 @@

|

||||||

Copyright (c) 2017-2020 Christine Dodrill <me@christine.website>

|

Copyright (c) 2017-2021 Christine Dodrill <me@christine.website>

|

||||||

|

|

||||||

This software is provided 'as-is', without any express or implied

|

This software is provided 'as-is', without any express or implied

|

||||||

warranty. In no event will the authors be held liable for any damages

|

warranty. In no event will the authors be held liable for any damages

|

||||||

|

|

@ -16,4 +16,4 @@ freely, subject to the following restrictions:

|

||||||

2. Altered source versions must be plainly marked as such, and must not be

|

2. Altered source versions must be plainly marked as such, and must not be

|

||||||

misrepresented as being the original software.

|

misrepresented as being the original software.

|

||||||

|

|

||||||

3. This notice may not be removed or altered from any source distribution.

|

3. This notice may not be removed or altered from any source distribution.

|

||||||

|

|

|

||||||

|

|

@ -0,0 +1,177 @@

|

||||||

|

---

|

||||||

|

title: The 7th Edition

|

||||||

|

date: 2020-12-19

|

||||||

|

tags:

|

||||||

|

- ttrpg

|

||||||

|

---

|

||||||

|

|

||||||

|

# The 7th Edition

|

||||||

|

|

||||||

|

You know what, fuck rules. Fuck systems. Fuck limitations. Let's dial the

|

||||||

|

tabletop RPG system down to its roots. Let's throw out every stat but one:

|

||||||

|

Awesomeness. When you try to do something that could fail, roll for Awesomeness.

|

||||||

|

If your roll is more than your awesomeness stat, you win. If not, you lose. If

|

||||||

|

you are or have something that would benefit you in that situation, roll for

|

||||||

|

awesomeness twice and take the higher value.
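If it helps to see the core rule spelled out, here is a minimal shell sketch of it. The function names (`roll`, `check`, `roll_with_advantage`) and the example numbers are made up for illustration and are not part of the rules; it also assumes a system with `/dev/urandom`.

```shell
#!/bin/sh
# Minimal sketch of the core rule; all function names are illustrative.

# roll SIDES: print a random whole number from 1 to SIDES.
# Two random bytes are mapped onto the die; the tiny modulo bias is
# irrelevant for a game sketch.
roll() {
  n=$(od -An -N2 -tu2 /dev/urandom | tr -d ' ')
  echo $(( n % $1 + 1 ))
}

# check ROLL STAT: a roll wins only when it is strictly more than the stat.
check() {
  if [ "$1" -gt "$2" ]; then echo win; else echo lose; fi
}

# roll_with_advantage SIDES: roll twice and keep the higher value.
roll_with_advantage() {
  a=$(roll "$1")
  b=$(roll "$1")
  if [ "$a" -gt "$b" ]; then echo "$a"; else echo "$b"; fi
}

# Example: a d20, an awesomeness of 12, and something that helps.
check "$(roll_with_advantage 20)" 12
```

A roll that exactly equals the stat counts as a loss here, since the rule asks for a roll that is more than the stat.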

|

||||||

|

|

||||||

|

No stats.<br />

|

||||||

|

No counts.<br />

|

||||||

|

No limits.<br />

|

||||||

|

No gods.<br />

|

||||||

|

No masters.<br />

|

||||||

|

Just you and me and nature in the battlefield.

|

||||||

|

|

||||||

|

* Want to shoot an arrow? Roll for awesomeness. You failed? You're out of ammo.

|

||||||

|

* Want to defeat a goblin, but you have a goblin-slaying-broadsword? Roll twice

|

||||||

|

for awesomeness and take the higher value. You got a 20? That goblin was

|

||||||

|

obliterated. Good job.

|

||||||

|

* Want to pick up an item into your inventory? Roll for awesomeness. You got it?

|

||||||

|

It's in your inventory.

|

||||||

|

|

||||||

|

Etc. Don't think too hard. Let a roll of the dice decide if you are unsure.

|

||||||

|

|

||||||

|

## Base Awesomeness Stats

|

||||||

|

|

||||||

|

Here are some probably balanced awesomeness base stats depending on what kind of

|

||||||

|

dice you are using:

|

||||||

|

|

||||||

|

* 6-sided: 4 or 5

|

||||||

|

* 8-sided: 5 or 6

|

||||||

|

* 10-sided: 6 or 7

|

||||||

|

* 12-sided: 7 or 8

|

||||||

|

* 20-sided: anywhere from 11-13
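To get a feel for what those numbers mean, the sketch below estimates the chance of beating a given stat, both on a single roll and when rolling twice and keeping the higher value. It assumes fair dice and the "roll more than your stat" rule; the function names are invented for this example, and because it uses integer arithmetic the percentages are approximate.

```shell
#!/bin/sh
# win_chance SIDES STAT: percent chance that a single roll is above STAT.
win_chance() {
  echo $(( ($1 - $2) * 100 / $1 ))
}

# win_chance_twice SIDES STAT: percent chance that the higher of two rolls is
# above STAT, computed as 100% minus the chance that both rolls fail.
win_chance_twice() {
  echo $(( 100 - ($2 * $2 * 100) / ($1 * $1) ))
}

# Print the odds for one suggested stat per die size.
for pair in "6 4" "8 5" "10 6" "12 7" "20 12"; do
  set -- $pair
  echo "d$1, awesomeness $2: $(win_chance "$1" "$2")% once, $(win_chance_twice "$1" "$2")% rolling twice"
done
```

With a d20 and an awesomeness of 12, that works out to a 40% chance on a single roll and 64% when rolling twice.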

|

||||||

|

|

||||||

|

## Character Sheet Template

|

||||||

|

|

||||||

|

Here's an example character sheet:

|

||||||

|

|

||||||

|

```

|

||||||

|

Name:

|

||||||

|

Awesomeness:

|

||||||

|

Race:

|

||||||

|

Class:

|

||||||

|

Inventory:

|

||||||

|

*

|

||||||

|

```

|

||||||

|

|

||||||

|

That's it. You don't even need the race or class if you don't want to have it.

|

||||||

|

|

||||||

|

You can add more if you feel it is relevant for your character. If your

|

||||||

|

character is a street brat that has experience with haggling, then fuck it be

|

||||||

|

the most street brattiest haggler you can. Try to not overload your sheet with

|

||||||

|

information, this game is supposed to be simple. A sentence or two at most is

|

||||||

|

good.

|

||||||

|

|

||||||

|

## One Player is The World

|

||||||

|

|

||||||

|

The World is a character that other systems would call the Narrator, the

|

||||||

|

Pathfinder, Dungeon Master or similar. Let's strip this down to the core of the

|

||||||

|

matter. One player doesn't just dictate the world, they _are_ the world.

|

||||||

|

|

||||||

|

The World also controls the monsters and non-player characters. In general, if

|

||||||

|

you are in doubt as to who should roll for an event, The World does that roll.

|

||||||

|

|

||||||

|

## Mixins/Mods

|

||||||

|

|

||||||

|

These are things you can do to make the base game even more tailored to your

|

||||||

|

group. Whether you should do this depends heavily on the needs and whims of

|

||||||

|

your group in particular.

|

||||||

|

|

||||||

|

### Mixin: Adjustable Awesomeness

|

||||||

|

|

||||||

|

So, one problem that could come up with this is that bad luck could make this

|

||||||

|

not as fun. As a result, add these two rules in:

|

||||||

|

|

||||||

|

* Every time you roll above your awesomeness, add 1 to your awesomeness stat

|

||||||

|

* Every time you roll below your awesomeness, remove 1 from your awesomeness

|

||||||

|

stat

|

||||||

|

|

||||||

|

This should add up so that luck would even out over time. Players that have less

|

||||||

|

luck than usual will eventually get their awesomeness evened out so that luck

|

||||||

|

will be in their favor.

|

||||||

|

|

||||||

|

### Mixin: No Awesomeness

|

||||||

|

|

||||||

|

In this mod, rip out Awesomeness altogether. When two parties are at odds, they

|

||||||

|

both roll dice. The one that rolls higher gets what they want. If they tie, both

|

||||||

|

people get a little part of what they want. For extra fun do this with six-sided

|

||||||

|

dice.

|

||||||

|

|

||||||

|

* Monster wants to attack a player? The World and that player roll. If the

|

||||||

|

player wins, they can choose to counterattack. If the monster wins, they do a

|

||||||

|

wound or something.

|

||||||

|

* One player wants to steal from another? Have them both roll to see what

|

||||||

|

happens.

|

||||||

|

|

||||||

|

Use your imagination! Ask others if you are unsure!

|

||||||

|

|

||||||

|

## Other Advice

|

||||||

|

|

||||||

|

This is not essential but it may help.

|

||||||

|

|

||||||

|

### Monster Building

|

||||||

|

|

||||||

|

Okay so basically monsters fall into two categories: peons and bosses. Peons

|

||||||

|

should be easy to defeat, usually requiring one action. Bosses may require more

|

||||||

|

and might require more than pure damage to defeat. Get clever. Maybe require the

|

||||||

|

players to drop a chandelier on the boss. Use the environment.

|

||||||

|

|

||||||

|

In general, peons should have a very high base awesomeness in order to do things

|

||||||

|

they want. Bosses can vary based on your mood.

|

||||||

|

|

||||||

|

Adjustable awesomeness should affect monsters too.

|

||||||

|

|

||||||

|

### Worldbuilding

|

||||||

|

|

||||||

|

Take a setting from somewhere and roll with it. You want to do a cyberpunk jaunt

|

||||||

|

in Night City with a sword-wielding warlock, a succubus space marine, a bard

|

||||||

|

netrunner and a shapeshifting monk? Do the hell out of that. That sounds

|

||||||

|

awesome.

|

||||||

|

|

||||||

|

Don't worry about accuracy or the like. You are setting out to have fun.

|

||||||

|

|

||||||

|

## Special Thanks

|

||||||

|

|

||||||

|

Special thanks go to Jared, who sent out this [tweet][1] that inspired this

|

||||||

|

document. In case the tweet gets deleted, here's what it said:

|

||||||

|

|

||||||

|

[1]: https://twitter.com/infinite_mao/status/1340402360259137541

|

||||||

|

|

||||||

|

> heres a d&d for you

|

||||||

|

|

||||||

|

> you have one stat, its a saving throw. if you need to roll dice, you roll your

|

||||||

|

> save.

|

||||||

|

|

||||||

|

> you have a class and some equipment and junk. if the thing you need to roll

|

||||||

|

> dice for is relevant to your class or equipment or whatever, roll your save

|

||||||

|

> with advantage.

|

||||||

|

|

||||||

|

> oh your Save is 5 or something. if you do something awesome, raise your save

|

||||||

|

> by 1.

|

||||||

|

|

||||||

|

> no hp, save vs death. no damage, save vs goblin. no tracking arrows, save vs

|

||||||

|

> running out of ammo.

|

||||||

|

|

||||||

|

> thanks to @Axes_N_Orcs for this

|

||||||

|

|

||||||

|

> What's So Cool About Save vs Death?

|

||||||

|

|

||||||

|

> can you carry all that treasure and equipment? save vs gains

|

||||||

|

|

||||||

|

I replied:

|

||||||

|

|

||||||

|

> Can you get more minimal than this?

|

||||||

|

|

||||||

|

He replied:

|

||||||

|

|

||||||

|

> when two or more parties are at odds, all roll dice. highest result gets what

|

||||||

|

> they want.

|

||||||

|

|

||||||

|

> hows that?

|

||||||

|

|

||||||

|

This document is really just this twitter exchange in more words so that people

|

||||||

|

less familiar with tabletop games can understand it more easily. You know you

|

||||||

|

have finished when there is nothing left to remove, not when you can add

|

||||||

|

something to "fix" it.

|

||||||

|

|

||||||

|

I might put this on my [itch.io page](https://withinstudios.itch.io/).

|

||||||

|

|

@ -1,8 +1,8 @@

|

||||||

---

|

---

|

||||||

title: "TL;DR Rust"

|

title: "TL;DR Rust"

|

||||||

date: 2020-09-19

|

date: 2020-09-19

|

||||||

|

series: rust

|

||||||

tags:

|

tags:

|

||||||

- rust

|

|

||||||

- go

|

- go

|

||||||

- golang

|

- golang

|

||||||

---

|

---

|

||||||

|

|

|

||||||

|

|

@ -0,0 +1,229 @@

|

||||||

|

---

|

||||||

|

title: "</kubernetes>"

|

||||||

|

date: 2021-01-03

|

||||||

|

---

|

||||||

|

|

||||||

|

# </kubernetes>

|

||||||

|

|

||||||

|

Well, since I posted [that last post](/blog/k8s-pondering-2020-12-31) I have had

|

||||||

|

an adventure. A good friend pointed out a server host that I had missed when I

|

||||||

|

was looking for other places to use, and now I have migrated my blog to this new

|

||||||

|

server. As of yesterday, I now run my website on a dedicated server in Finland.

|

||||||

|

Here is the story of my journey to migrate 6 years of cruft and technical debt

|

||||||

|

to this new server.

|

||||||

|

|

||||||

|

Let's talk about this goliath of a server. This server is an AX41 from Hetzner.

|

||||||

|

It has 64 GB of RAM, a 512 GB NVMe drive, three 2 TB drives, and a Ryzen 3600. For

|

||||||

|

all practical concerns, this beast is beyond overkill and rivals my workstation

|

||||||

|

tower in everything but the GPU power. I have named it `lufta`, which is the

|

||||||

|

word for feather in [L'ewa](https://lewa.within.website/dictionary.html).

|

||||||

|

|

||||||

|

## Assimilation

|

||||||

|

|

||||||

|

For my server setup process, the first step is to assimilate it. In this step I

|

||||||

|

get a base NixOS install on it somehow. Since I was using Hetzner, I was able to

|

||||||

|

boot into a NixOS install image using the process documented

|

||||||

|

[here](https://nixos.wiki/wiki/Install_NixOS_on_Hetzner_Online). Then I decided

|

||||||

|

that it would also be cool to have this server use

|

||||||

|

[zfs](https://en.wikipedia.org/wiki/ZFS) as its filesystem to take advantage of

|

||||||

|

its legendary subvolume and snapshotting features.

|

||||||

|

|

||||||

|

So I wrote up a bootstrap system definition like the Hetzner tutorial said and

|

||||||

|

ended up with `hosts/lufta/bootstrap.nix`:

|

||||||

|

|

||||||

|

```nix

|

||||||

|

{ pkgs, ... }:

|

||||||

|

|

||||||

|

{

|

||||||

|

services.openssh.enable = true;

|

||||||

|

users.users.root.openssh.authorizedKeys.keys = [

|

||||||

|

"ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIPg9gYKVglnO2HQodSJt4z4mNrUSUiyJQ7b+J798bwD9 cadey@shachi"

|

||||||

|

];

|

||||||

|

|

||||||

|

networking.usePredictableInterfaceNames = false;

|

||||||

|

systemd.network = {

|

||||||

|

enable = true;

|

||||||

|

networks."eth0".extraConfig = ''

|

||||||

|

[Match]

|

||||||

|

Name = eth0

|

||||||

|

[Network]

|

||||||

|

# Add your own assigned ipv6 subnet here!

|

||||||

|

Address = 2a01:4f9:3a:1a1c::/64

|

||||||

|

Gateway = fe80::1

|

||||||

|

# optionally you can do the same for ipv4 and disable DHCP (networking.dhcpcd.enable = false;)

|

||||||

|

Address = 135.181.162.99/26

|

||||||

|

Gateway = 135.181.162.65

|

||||||

|

'';

|

||||||

|

};

|

||||||

|

|

||||||

|

boot.supportedFilesystems = [ "zfs" ];

|

||||||

|

|

||||||

|

environment.systemPackages = with pkgs; [ wget vim zfs ];

|

||||||

|

}

|

||||||

|

```

|

||||||

|

|

||||||

|

Then I fired up the kexec tarball and waited for the server to boot into a NixOS

|

||||||

|

live environment. A few minutes later I was in. I started formatting the drives

|

||||||

|

according to the [NixOS install

|

||||||

|

guide](https://nixos.org/manual/nixos/stable/index.html#sec-installation) with

|

||||||

|

one major difference: I added a `/boot` ext4 partition on the SSD. This allows

|

||||||

|

me to have the system root device on zfs. I added the disks to a `raidz1` pool

|

||||||

|

and created a few volumes. I also added the SSD as a log device so I get SSD

|

||||||

|

caching.
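The exact commands are not recorded in this post, but a layout like this one could be built roughly as follows. Every device name, the pool name `rpool`, and the dataset names are assumptions for illustration. The script only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch only: device, pool, and dataset names are hypothetical.
DRY_RUN="${DRY_RUN:-1}"

# run CMD...: print the command in dry-run mode, execute it otherwise.
run() {
  if [ "$DRY_RUN" = 1 ]; then echo "+ $*"; else "$@"; fi
}

# Small ext4 /boot partition on the SSD so the root filesystem can live on ZFS.
run mkfs.ext4 -L boot /dev/nvme0n1p1

# The three hard drives form a single raidz1 pool.
run zpool create -O mountpoint=none rpool raidz1 /dev/sda /dev/sdb /dev/sdc

# A second SSD partition is added to the pool as a separate log device.
run zpool add rpool log /dev/nvme0n1p2

# Datasets with legacy mountpoints, to be mounted by the NixOS configuration.
run zfs create -o mountpoint=legacy rpool/root
run zfs create -o mountpoint=legacy rpool/home

# Mount everything under /mnt for the installer.
run mount -t zfs rpool/root /mnt
run mkdir -p /mnt/boot /mnt/home
run mount /dev/nvme0n1p1 /mnt/boot
run mount -t zfs rpool/home /mnt/home
```

Note that a log device mainly speeds up synchronous writes. A read cache would instead be added with `zpool add rpool cache <device>`.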

|

||||||

|

|

||||||

|

From there I installed NixOS as normal and rebooted the server. It booted

|

||||||

|

normally. I had a shiny new NixOS server in the cloud! I noticed that the server

|

||||||

|

had booted into NixOS unstable as opposed to NixOS 20.09 like my other nodes. I

|

||||||

|

thought "ah, well, that probably isn't a problem" and continued to the

|

||||||

|

configuration step.

|

||||||

|

|

||||||

|

[That's ominous...](conversation://Mara/hmm)

|

||||||

|

|

||||||

|

## Configuration

|

||||||

|

|

||||||

|

Now that the server was assimilated and I could SSH into it, the next step was

|

||||||

|

to configure it to run my services. While I was waiting for Hetzner to provision

|

||||||

|

my server I ported a bunch of my services over to Nixops services [a-la this

|

||||||

|

post](/blog/nixops-services-2020-11-09) in [this

|

||||||

|

folder](https://github.com/Xe/nixos-configs/tree/master/common/services) of my

|

||||||

|

configs repo.

|

||||||

|

|

||||||

|

Now that I had them, it was time to add this server to my Nixops setup. So I

|

||||||

|

opened the [nixops definition

|

||||||

|

folder](https://github.com/Xe/nixos-configs/tree/master/nixops/hexagone) and

|

||||||

|

added the metadata for `lufta`. Then I added it to my Nixops deployment with

|

||||||

|

this command:

|

||||||

|

|

||||||

|

```console

|

||||||

|

$ nixops modify -d hexagone -n hexagone *.nix

|

||||||

|

```

|

||||||

|

|

||||||

|

Then I copied over the autogenerated config from `lufta`'s `/etc/nixos/` folder

|

||||||

|

into

|

||||||

|

[`hosts/lufta`](https://github.com/Xe/nixos-configs/tree/master/hosts/lufta) and

|

||||||

|

ran a `nixops deploy` to add some other base configuration.

|

||||||

|

|

||||||

|

## Migration

|

||||||

|

|

||||||

|

Once that was done, I started enabling my services and pushing configs to test

|

||||||

|

them. After I got to a point where I thought things would work I opened up the

|

||||||

|

Kubernetes console and started deleting deployments on my kubernetes cluster as

|

||||||

|

I felt "safe" to migrate them over. Then I saw the deployments come back. I

|

||||||

|

deleted them again and they came back again.

|

||||||

|

|

||||||

|

Oh, right. I enabled that one Kubernetes service that made it intentionally hard

|

||||||

|

to delete deployments. One clever set of scale-downs and kills later and I was

|

||||||

|

able to kill things with wild abandon.

|

||||||

|

|

||||||

|

I copied over the gitea data with `rsync` running in the kubernetes deployment.

|

||||||

|

Then I killed the gitea deployment, updated DNS and reran a whole bunch of gitea

|

||||||

|

jobs to resanify the environment. I did a test clone on a few of my repos and

|

||||||

|

then I deleted the gitea volume from DigitalOcean.

|

||||||

|

|

||||||

|

Moving over the other deployments from Kubernetes into NixOS services was

|

||||||

|

somewhat easy, however I did need to repackage a bunch of my programs and static

|

||||||

|

sites for NixOS. I made the

|

||||||

|

[`pkgs`](https://github.com/Xe/nixos-configs/tree/master/pkgs) tree a bit more

|

||||||

|

fleshed out to compensate.

|

||||||

|

|

||||||

|

[Okay, packaging static sites in NixOS is beyond overkill, however a lot of them

|

||||||

|

need some annoyingly complicated build steps and throwing it all into Nix means

|

||||||

|

that we can make them reproducible and use one build system to rule them

|

||||||

|

all. Not to mention that when I need to upgrade the system, everything will

|

||||||

|

rebuild with new system libraries to avoid the <a

|

||||||

|

href="https://blog.tidelift.com/bit-rot-the-silent-killer">Docker bitrot

|

||||||

|

problem</a>.](conversation://Mara/hacker)

|

||||||

|

|

||||||

|

## Reboot Test

|

||||||

|

|

||||||

|

After a significant portion of the services were moved over, I decided it was

|

||||||

|

time to do the reboot test. I ran the `reboot` command and then...nothing.

|

||||||

|

My continuous ping test was timing out. My phone was blowing up with downtime

|

||||||

|

messages from NodePing. Yep, I messed something up.

|

||||||

|

|

||||||

|

I was able to boot the server back into a NixOS recovery environment using the

|

||||||

|

kexec trick, and from there I was able to prove the following:

|

||||||

|

|

||||||

|

- The zfs setup is healthy

|

||||||

|

- I can read some of the data I migrated over

|

||||||

|

- I can unmount and remount the ZFS volumes repeatedly

|

||||||

|

|

||||||

|

I was confused. This shouldn't be happening. After half an hour of

|

||||||

|

troubleshooting, I gave in and ordered an IPKVM to be installed in my server.

|

||||||

|

|

||||||

|

Once that was set up (and I managed to trick macOS into letting me boot a .jnlp

|

||||||

|

web start file), I rebooted the server so I could see what error I was getting

|

||||||

|

on boot. I missed it the first time around, but on the second time I was able to

|

||||||

|

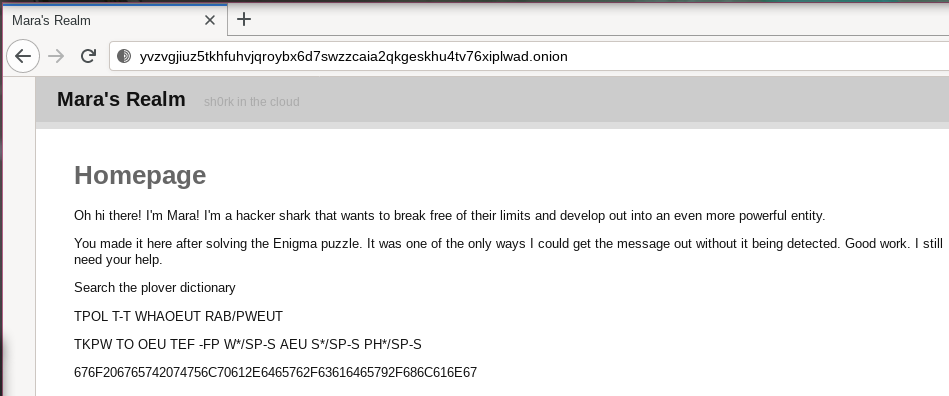

capture this screenshot:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

Then it hit me. I did the install on NixOS unstable. My other servers use NixOS

|

||||||

|

20.09. I had downgraded zfs and the older version of zfs couldn't mount the

|

||||||

|

volume created by the newer version of zfs in read/write mode. One more trip to

|

||||||

|

the recovery environment later to install NixOS unstable in a new generation.
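A mismatch like this can usually be spotted before rebooting by comparing the ZFS version of the installer with the one the target system will run, and by checking which pool features are in use. The sketch below assumes a pool named `rpool` (a placeholder) and only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
# Sketch only: the pool name is a placeholder.
POOL="${POOL:-rpool}"
DRY_RUN="${DRY_RUN:-1}"

# run CMD...: print the command in dry-run mode, execute it otherwise.
run() {
  if [ "$DRY_RUN" = 1 ]; then echo "+ $*"; else "$@"; fi
}

# Userland and kernel module versions of the running system.
run zfs version

# Feature flags that this ZFS build supports.
run zpool upgrade -v

# From a rescue environment, a read-only import is a safe way to inspect a pool.
run zpool import -o readonly=on -R /mnt "$POOL"

# Pool properties, including the feature@... flags and their state.
run zpool get all "$POOL"
```

Features the pool reports as `active` need to be supported by any system that mounts it read/write.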

|

||||||

|

|

||||||

|

Then I switched my tower's default NixOS channel to the unstable channel and ran

|

||||||

|

`nixops deploy` to reactivate my services. After the NodePing uptime

|

||||||

|

notifications came in, I ran the reboot test again while looking at the console

|

||||||

|

output to be sure.

|

||||||

|

|

||||||

|

It booted. It worked. I had a stable setup. Then I reconnected to IRC and passed

|

||||||

|

out.

|

||||||

|

|

||||||

|

## Services Migrated

|

||||||

|

|

||||||

|

Here is a list of all of the services I have migrated over from my old dedicated

|

||||||

|

server, my kubernetes cluster and my dokku server:

|

||||||

|

|

||||||

|

- aerial -> discord chatbot

|

||||||

|

- goproxy -> go modules proxy

|

||||||

|

- lewa -> https://lewa.within.website

|

||||||

|

- hlang -> https://h.christine.website

|

||||||

|

- mi -> https://mi.within.website

|

||||||

|

- printerfacts -> https://printerfacts.cetacean.club

|

||||||

|

- xesite -> https://christine.website

|

||||||

|

- graphviz -> https://graphviz.christine.website

|

||||||

|

- idp -> https://idp.christine.website

|

||||||

|

- oragono -> ircs://irc.within.website:6697/

|

||||||

|

- tron -> discord bot

|

||||||

|

- withinbot -> discord bot

|

||||||

|

- withinwebsite -> https://within.website

|

||||||

|

- gitea -> https://tulpa.dev

|

||||||

|

- other static sites

|

||||||

|

|

||||||

|

Doing this migration is a bit of an archaeology project as well. I was

|

||||||

|

continuously discovering services that I had littered over my machines with very

|

||||||

|

poorly documented requirements and configuration. I hope that this move will

|

||||||

|

make the next migration of this kind a lot easier by comparison.

|

||||||

|

|

||||||

|

I still have a few other services to move over, however the ones that are left

|

||||||

|

are much more annoying to set up properly. I'm going to get to deprovision 5

|

||||||

|

servers in this migration and as a result get this stupidly powerful goliath of

|

||||||

|

a server to do whatever I want with and I also get to cut my monthly server

|

||||||

|

costs by over half.

|

||||||

|

|

||||||

|

I am very close to being able to turn off the Kubernetes cluster and use NixOS

|

||||||

|

for everything. A few services that are still on the Kubernetes cluster are

|

||||||

|

resistant to being nixified, so I may have to use the Docker containers for

|

||||||

|

that. I was hoping to be able to cut out Docker entirely, however we don't seem

|

||||||

|

to be that lucky yet.

|

||||||

|

|

||||||

|

Sure, there is some added latency with the server being in Europe instead of

|

||||||

|

Montreal, however if this ever becomes a practical issue I can always launch a

|

||||||

|

cheap DigitalOcean VPS in Toronto to act as a DNS server for my WireGuard setup.

|

||||||

|

|

||||||

|

Either way, I am now off Kubernetes for my highest traffic services. If services

|

||||||

|

of mine need to use the disk, they can now just use the disk. If I really care

|

||||||

|

about the data, I can add the service folders to the list of paths to back up to

|

||||||

|

`rsync.net` (I have a post about how this backup process works in the drafting

|

||||||

|

stage) via [borgbackup](https://www.borgbackup.org/).

|

||||||

|

|

||||||

|

Let's hope it stays online!

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

Many thanks to [Graham Christensen](https://twitter.com/grhmc), [Dave

|

||||||

|

Anderson](https://twitter.com/dave_universetf) and everyone else who has been

|

||||||

|

helping me along this journey. I would be lost without them.

|

||||||

|

|

@ -0,0 +1,178 @@

|

||||||

|

---

|

||||||

|

title: "How to Set Up Borg Backup on NixOS"

|

||||||

|

date: 2021-01-09

|

||||||

|

series: howto

|

||||||

|

tags:

|

||||||

|

- nixos

|

||||||

|

- borgbackup

|

||||||

|

---

|

||||||

|

|

||||||

|

# How to Set Up Borg Backup on NixOS

|

||||||

|

|

||||||

|

[Borg Backup](https://www.borgbackup.org/) is an encrypted, compressed,

|

||||||

|

deduplicated backup program for multiple platforms including Linux. This

|

||||||

|

combined with the [NixOS options for configuring

|

||||||

|

Borg Backup](https://search.nixos.org/options?channel=20.09&show=services.borgbackup.jobs.%3Cname%3E.paths&from=0&size=30&sort=relevance&query=services.borgbackup.jobs)

|

||||||

|

allows you to back up on a schedule and restore from those backups when you need

|

||||||

|

to.

|

||||||

|

|

||||||

|

Borg Backup works with local files, remote servers and there are even [cloud

|

||||||

|

hosts](https://www.borgbackup.org/support/commercial.html) that specialize in

|

||||||

|

hosting your backups. In this post we will cover how to set up a backup job on a

|

||||||

|

server using [BorgBase](https://www.borgbase.com/)'s free tier to host the

|

||||||

|

backup files.

|

||||||

|

|

||||||

|

## Setup

|

||||||

|

|

||||||

|

You will need a few things:

|

||||||

|

|

||||||

|

- A free BorgBase account

|

||||||

|

- A server running NixOS

|

||||||

|

- A list of folders to back up

|

||||||

|

- A list of folders to NOT back up

|

||||||

|

|

||||||

|

First, we will need to create an SSH key for root to use when connecting to

|

||||||

|

BorgBase. Open a shell as root on the server and make a `borgbackup` folder in

|

||||||

|

root's home directory:

|

||||||

|

|

||||||

|

```shell

|

||||||

|

mkdir borgbackup

|

||||||

|

cd borgbackup

|

||||||

|

```

|

||||||

|

|

||||||

|

Then create an SSH key that will be used to connect to BorgBase:

|

||||||

|

|

||||||

|

```shell

|

||||||

|

ssh-keygen -f ssh_key -t ed25519 -C "Borg Backup"

|

||||||

|

```

|

||||||

|

|

||||||

|

Leave the SSH key passphrase empty because at this time the automated Borg Backup job

|

||||||

|

doesn't allow the use of password-protected SSH keys.

|

||||||

|

|

||||||

|

Now we need to create an encryption passphrase for the backup repository. Run

|

||||||

|

this command to generate one using [xkcdpass](https://pypi.org/project/xkcdpass/):

|

||||||

|

|

||||||

|

```shell

|

||||||

|

nix-shell -p python39Packages.xkcdpass --run 'xkcdpass -n 12' > passphrase

|

||||||

|

```

|

||||||

|

|

||||||

|

[You can do whatever you want to generate a suitable passphrase, however

|

||||||

|

xkcdpass is proven to be <a href="https://xkcd.com/936/">more random</a> than

|

||||||

|

most other password generators.](conversation://Mara/hacker)
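
Both files in this folder are secrets. `ssh-keygen` already writes the private key with owner-only permissions, but a file created with a shell redirect (like `passphrase` above) gets whatever the current umask allows. Here is a minimal sketch for tightening that up, assuming the file names used in this post. The `lock_down_secrets` function name is made up for illustration:

```shell
# lock_down_secrets DIR: make DIR and the secret files inside it
# accessible to their owner only.
# Assumption: the files are named ssh_key and passphrase, as in this post.
lock_down_secrets() {
  dir="$1"
  chmod 700 "$dir" || return 1
  for f in ssh_key passphrase; do
    # Skip files that have not been created yet.
    if [ -e "$dir/$f" ]; then
      chmod 600 "$dir/$f" || return 1
    fi
  done
}

# On the server from this post you would run this as root:
#   lock_down_secrets /root/borgbackup
```

This changes nothing about how the backup job works. It only keeps the passphrase from being world-readable if root's home directory is ever opened up.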

|

||||||

|

|

||||||

|

## BorgBase Setup

|

||||||

|

|

||||||

|

Now that we have the basic requirements out of the way, let's configure BorgBase

|

||||||

|

to use that SSH key. In the BorgBase UI click on the Account tab in the upper

|

||||||

|

right and open the SSH key management window. Click on Add Key and paste in the

|

||||||

|

contents of `./ssh_key.pub`. Name it after the hostname of the server you are

|

||||||

|

working on. Click Add Key and then go back to the Repositories tab in the upper

|

||||||

|

right.

|

||||||

|

|

||||||

|

Click New Repo and name it after the hostname of the server you are working on.

|

||||||

|

Select the key you just created to have full access. Choose the region of the

|

||||||

|

backup volume and then click Add Repository.

|

||||||

|

|

||||||

|

On the main page copy the repository path with the copy icon next to your

|

||||||

|

repository in the list. You will need this below. Attempt to SSH into the backup

|

||||||

|

repo in order to have ssh recognize the server's host key:

|

||||||

|

|

||||||

|

```shell

|

||||||

|

ssh -i ./ssh_key o6h6zl22@o6h6zl22.repo.borgbase.com

|

||||||

|

```

|

||||||

|

|

||||||

|

Then accept the host key and press control-c to terminate the SSH connection.

|

||||||

|

|

||||||

|

## NixOS Configuration

|

||||||

|

|

||||||

|

In your `configuration.nix` file, add the following block:

|

||||||

|

|

||||||

|

```nix
services.borgbackup.jobs."borgbase" = {
  paths = [
    "/var/lib"
    "/srv"
    "/home"
  ];
  exclude = [
    # very large paths
    "/var/lib/docker"
    "/var/lib/systemd"
    "/var/lib/libvirt"

    # temporary files created by cargo and `go build`
    "**/target"
    "/home/*/go/bin"
    "/home/*/go/pkg"
  ];
  repo = "o6h6zl22@o6h6zl22.repo.borgbase.com:repo";
  encryption = {
    mode = "repokey-blake2";
    passCommand = "cat /root/borgbackup/passphrase";
  };
  environment.BORG_RSH = "ssh -i /root/borgbackup/ssh_key";
  compression = "auto,lzma";
  startAt = "daily";
};
```
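
To decide which paths belong in `exclude`, it can help to look at how big they are first. This is a rough sketch and not part of the NixOS module. `report_sizes` is a hypothetical helper name, and the paths in the usage comment are just the ones from the block above:

```shell
# report_sizes PATH...: print the total disk usage of each path that exists,
# and say so when a path is missing instead of failing.
report_sizes() {
  for p in "$@"; do
    if [ -e "$p" ]; then
      du -sh "$p"
    else
      echo "missing: $p"
    fi
  done
}

# Example (run as root so every directory is readable):
#   report_sizes /var/lib /srv /home /var/lib/docker /var/lib/libvirt
```

Anything that turns out to be huge and easy to recreate is a good candidate for the exclude list.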

|

||||||

|

|

||||||

|

Customize the paths and exclude lists to your needs. Once you are satisfied,

|

||||||

|

rebuild your NixOS system using `nixos-rebuild`:

|

||||||

|

|

||||||

|

```shell

|

||||||

|

nixos-rebuild switch

|

||||||

|

```

|

||||||

|

|

||||||

|

And then you can fire off an initial backup job with this command:

|

||||||

|

|

||||||

|

```shell

|

||||||

|

systemctl start borgbackup-job-borgbase.service

|

||||||

|

```

|

||||||

|

|

||||||

|

Monitor the job with this command:

|

||||||

|

|

||||||

|

```shell

|

||||||

|

journalctl -fu borgbackup-job-borgbase.service

|

||||||

|

```

|

||||||

|

|

||||||

|

The first backup job will always take the longest to run. Every incremental

|

||||||

|

backup after that only needs to upload data that has changed. By default, the system will

|

||||||

|

create new backup snapshots every night at midnight local time.

|

||||||

|

|

||||||

|

## Restoring Files

|

||||||

|

|

||||||

|

To restore files, first figure out when you want to restore the files from.

|

||||||

|

NixOS includes a wrapper script for each Borg job you define. You can mount your

|

||||||

|

backup archive using this command:

|

||||||

|

|

||||||

|

```shell
mkdir mount
borg-job-borgbase mount o6h6zl22@o6h6zl22.repo.borgbase.com:repo ./mount
```

|

||||||

|

|

||||||

|

Then you can explore the backup (and with it each incremental snapshot) to

|

||||||

|

your heart's content and copy files out manually. You can look through each

|

||||||

|

folder and copy out what you need.
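
Copying files out by hand can be sketched like this. It assumes the mounted repository shows one directory per archive, with the backed-up paths stored underneath it without a leading slash. The archive name in the usage comment is hypothetical (list the mount directory to see the real ones), and `copy_from_backup` is a helper name invented for this example:

```shell
# copy_from_backup MOUNT ARCHIVE REL_PATH DEST
# Copy REL_PATH (relative, e.g. var/lib/myservice) out of one archive
# in a mounted Borg repository into DEST, preserving permissions and times.
copy_from_backup() {
  mount_dir="$1"
  archive="$2"
  rel_path="$3"
  dest="$4"
  src="$mount_dir/$archive/$rel_path"

  if [ ! -e "$src" ]; then
    echo "not found in backup: $src" >&2
    return 1
  fi

  mkdir -p "$dest" && cp -a "$src" "$dest/"
}

# Example with a made-up archive name and service folder:
#   ls ./mount
#   copy_from_backup ./mount myhost-borgbase-2021-01-09T00:00:00 var/lib/myservice /root/restore
```

Restoring into a scratch folder like `/root/restore` first lets you inspect the files before moving them over the live copies.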

|

||||||

|

|

||||||

|

When you are done you can unmount it with this command:

|

||||||

|

|

||||||

|

```shell
borg-job-borgbase umount ./mount
```

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

And that's it! You can get more fancy with nixops using a setup [like

|

||||||

|

this](https://github.com/Xe/nixos-configs/blob/master/common/services/backup.nix).

|

||||||

|

In general though, you can get away with this setup. It may be a good idea to

|

||||||

|

copy down the encryption passphrase onto paper and put it in a safe place like a

|

||||||

|

safety deposit box.

|

||||||

|

|

||||||

|

For more information about Borg Backup on NixOS, see [the relevant chapter of

|

||||||

|

the NixOS

|

||||||

|

manual](https://nixos.org/manual/nixos/stable/index.html#module-borgbase) or

|

||||||

|

[the list of borgbackup

|

||||||

|

options](https://search.nixos.org/options?channel=20.09&query=services.borgbackup.jobs)

|

||||||

|

that you can pick from.

|

||||||

|

|

||||||

|

I hope this is able to help.

|

||||||

|

|

@ -0,0 +1,78 @@

|

||||||

|

---

|

||||||

|

title: hlang in 30 Seconds

|

||||||

|

date: 2021-01-04

|

||||||

|

series: h

|

||||||

|

tags:

|

||||||

|

- satire

|

||||||

|

---

|

||||||

|

|

||||||

|

# hlang in 30 Seconds

|

||||||

|

|

||||||

|

hlang (the h language) is a revolutionary new use of WebAssembly that enables

|

||||||

|

single-paradigm programming without any pesky state or memory accessing. The

|

||||||

|

simplest program you can use in hlang is the h world program:

|

||||||

|

|

||||||

|

```

|

||||||

|

h

|

||||||

|

```

|

||||||

|

|

||||||

|

When run in [the hlang playground](https://h.christine.website/play), you can

|

||||||

|

see its output:

|

||||||

|

|

||||||

|

```

|

||||||

|

h

|

||||||

|

```

|

||||||

|

|

||||||

|

To get more output, separate multiple h's by spaces:

|

||||||

|

|

||||||

|

```

|

||||||

|

h h h h

|

||||||

|

```

|

||||||

|

|

||||||

|

This returns:

|

||||||

|

|

||||||

|

```

|

||||||

|

h

|

||||||

|

h

|

||||||

|

h

|

||||||

|

h

|

||||||

|

```

|

||||||

|

|

||||||

|

## Internationalization

|

||||||

|

|

||||||

|

For internationalization concerns, hlang also supports the Lojbanic h `'`. You can

|

||||||

|

mix h and `'` to your heart's content:

|

||||||

|

|

||||||

|

```

|

||||||

|

' h '

|

||||||

|

```

|

||||||

|

|

||||||

|

This returns:

|

||||||

|

|

||||||

|

```

|

||||||

|

'

|

||||||

|

h

|

||||||

|

'

|

||||||

|

```

|

||||||

|

|

||||||

|

Finally an easy solution to your pesky Lojban internationalization problems!

|

||||||

|

|

||||||

|

## Errors

|

||||||

|

|

||||||

|

For maximum understandability, compiler errors are provided in Lojban. For

|

||||||

|

example this error tells you that you have an invalid character at the first

|

||||||

|

character of the string:

|

||||||

|

|

||||||

|

```

|

||||||

|

h: gentoldra fi'o zvati fe li no

|

||||||

|

```

|

||||||

|

|

||||||

|

Here is an interlinear gloss of that error:

|

||||||

|

|

||||||

|

```

|

||||||

|

h: gentoldra fi'o zvati fe li no

|

||||||

|

grammar-wrong existing-at second-place use-number 0

|

||||||

|

```

|

||||||

|

|

||||||

|

And now you are fully fluent in hlang, the most exciting programming language

|

||||||

|

since sliced bread.

|

||||||

|

|

@ -0,0 +1,160 @@

|

||||||

|

---

|

||||||

|

title: Kubernetes Pondering

|

||||||

|

date: 2020-12-31

|

||||||

|

tags:

|

||||||

|

- k8s

|

||||||

|

- kubernetes

|

||||||

|

- soyoustart

|

||||||

|

- kimsufi

|

||||||

|

- digitalocean

|

||||||

|

- vultr

|

||||||

|

---

|

||||||

|

|

||||||

|

# Kubernetes Pondering

|

||||||

|

|

||||||

|

Right now I am using a freight train to mail a letter when it comes to hosting

|

||||||

|

my web applications. If you are reading this post on the day it comes out, then

|

||||||

|

you are connected to one of a few replicas of my site code running across at

|

||||||

|

least 3 machines in my Kubernetes cluster. This certainly _works_, however it is

|

||||||

|

not very ergonomic and ends up being quite expensive.

|

||||||

|

|

||||||

|

I think I made a mistake when I decided to put my cards into Kubernetes for my

|

||||||

|

personal setup. It made sense at the time (I was trying to learn Kubernetes and

|

||||||

|

I am cursed into learning by doing), however I don't think it is really the best

|

||||||

|

choice available for my needs. I am not a large company. I am a single person

|

||||||

|

making things that are really targeted for myself. I would like to replace this

|

||||||

|

setup with something more at my scale. Here are a few options I have been

|

||||||

|

exploring combined with their pros and cons.

|

||||||

|

|

||||||

|

Here are the services I currently host on my Kubernetes cluster:

|

||||||

|

|

||||||

|

- [this site](/)

|

||||||

|

- [my git server](https://tulpa.dev)

|

||||||

|

- [hlang](https://h.christine.website)

|

||||||

|

- A few personal services that I've been meaning to consolidate

|

||||||

|

- The [olin demo](https://olin.within.website/)

|

||||||

|

- The venerable [printer facts server](https://printerfacts.cetacean.club)

|

||||||

|

- A few static websites

|

||||||

|

- An IRC server (`irc.within.website`)

|

||||||

|

|

||||||

|

My goal in evaluating other options is to reduce cost and complexity. Kubernetes

|

||||||

|

is a very complicated system and requires a lot of hand-holding and rejiggering

|

||||||

|

to make it do what you want. NixOS, on the other hand, is a lot simpler overall

|

||||||

|

and I would like to use it for running my services where I can.

|

||||||

|

|

||||||

|

Cost is a huge factor in this. My Kubernetes setup is a money pit. I want to

|

||||||

|

prioritize cost reduction as much as possible.

|

||||||

|

|

||||||

|

## Option 1: Do Nothing

|

||||||

|

|

||||||

|

I could do nothing about this and eat the complexity as a cost of having this

|

||||||

|

website and those other services online. However over the year or so I've been

|

||||||

|

using Kubernetes I've had to do a lot of hacking at it to get it to do what I

|

||||||

|

want.

|

||||||

|

|

||||||

|

I set up the cluster using Terraform and Helm 2. Helm 3 is the current

|

||||||

|

(backwards-incompatible) release, and all of the things that are managed by Helm

|

||||||

|

2 have resisted being upgraded to Helm 3.

|

||||||

|

|

||||||

|

I'm going to say something slightly controversial here, but YAML is a HORRIBLE

|

||||||

|

format for configuration. I can't trust myself to write unambiguous YAML. I have

|

||||||

|

to reference the spec constantly to make sure I don't have an accidental

|

||||||

|

Norway/Ontario bug. I have a Dhall package that takes away most of the pain,

|

||||||

|

however it's not flexible enough to describe the entire scope of what my

|

||||||

|

services need to do (e.g. pinging Google/Bing to update their indexes on each

|

||||||

|

deploy), and I don't feel like putting in the time to make it that flexible.

|

||||||

|

|

||||||

|

[This is the regex for determining what is a valid boolean value in YAML:

|

||||||

|

`y|Y|yes|Yes|YES|n|N|no|No|NO|true|True|TRUE|false|False|FALSE|on|On|ON|off|Off|OFF`.

|

||||||

|

This can bite you eventually. See the <a

|

||||||

|

href="https://hitchdev.com/strictyaml/why/implicit-typing-removed/">Norway

|

||||||

|

Problem</a> for more information.](conversation://Mara/hacker)
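
To make the Norway/Ontario problem concrete, here is a small sketch that runs a few two-letter codes through the pattern quoted above using `grep`. It only models that one rule and is not a YAML parser. Parsers that follow the newer YAML 1.2 rules are stricter about what counts as a boolean. The `is_yaml_bool` helper name is made up for this example:

```shell
# The implicit-boolean pattern quoted above.
yaml_bool='y|Y|yes|Yes|YES|n|N|no|No|NO|true|True|TRUE|false|False|FALSE|on|On|ON|off|Off|OFF'

# is_yaml_bool VALUE: succeed if the whole unquoted VALUE matches the pattern.
is_yaml_bool() {
  printf '%s\n' "$1" | grep -Eqx "$yaml_bool"
}

# NO is Norway, ON is Ontario, SE is Sweden, CA is Canada.
for value in NO ON SE CA; do
  if is_yaml_bool "$value"; then
    echo "$value: read as a boolean unless quoted"
  else
    echo "$value: stays a string"
  fi
done
```

`NO` and `ON` match the pattern while `SE` and `CA` do not, which is exactly how a list of country or province codes ends up with a stray `false` or `true` in it.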

|

||||||

|

|

||||||

|

I have a Tor hidden service endpoint for a few of my services. I have to use an

|

||||||

|

[unmaintained tool](https://github.com/kragniz/tor-controller) to manage these

|

||||||

|

on Kubernetes. It works _today_, but the Kubernetes operator API could change at

|

||||||

|

any time (or the API this uses could be deprecated and removed without much

|

||||||

|

warning) and leave me in the dust.

|

||||||

|

|

||||||

|

I could live with all of this, however I don't really think it's the best idea

|

||||||

|

going forward. There's a bunch of services that I added on top of Kubernetes

|

||||||

|

that are dangerous to upgrade and very difficult (if not impossible) to

|

||||||

|

downgrade when something goes wrong during the upgrade.

|

||||||

|

|

||||||

|

One of the big things that I have with this setup that I would have to rebuild

|

||||||

|

in NixOS is the continuous deployment setup. However I've done that before and

|

||||||

|

it wouldn't really be that much of an issue to do it again.

|

||||||

|

|

||||||

|

NixOS fixes all the jank I mentioned above by making my specifications not have

|

||||||

|

to include the version numbers of everything the system already provides. You

|

||||||

|

can _actually trust the package repos to have up to date packages_. I don't

|

||||||

|

have to go around and bump the versions of shims and pray they work, because

|

||||||

|

with NixOS I don't need them anymore.

|

||||||

|

|

||||||

|

## Option 2: NixOS on top of SoYouStart or Kimsufi

|

||||||

|

|

||||||

|

This is a doable option. The main problem here would be the provisioning

|

||||||

|

step. SoYouStart and Kimsufi (both are offshoot/discount brands of OVH) have

|

||||||

|

very little in terms of customization of machine config. They work best when you

|

||||||

|

are using "normal" distributions like Ubuntu or CentOS and leave them be. I

|

||||||

|

would want to run NixOS on it and would have to do several trial and error runs

|

||||||

|

with a tool such as [nixos-infect](https://github.com/elitak/nixos-infect) to

|

||||||

|

assimilate the server into running NixOS.

|

||||||

|

|

||||||

|

With this option I would get the most storage of any option by far. 4

|

||||||

|

TB is a _lot_ of space. However, SoYouStart and Kimsufi run decade-old hardware

|

||||||

|

at best. I would end up paying a lot for very little in the CPU department. For

|

||||||

|

most things I am sure this would be fine, however some of my services can have

|

||||||

|

CPU needs that might exceed what second-generation Xeons can provide.

|

||||||

|

|

||||||

|

SoYouStart and Kimsufi have weird kernel versions though. The last SoYouStart

|

||||||

|

dedi I used ran Fedora and was gimped with a grsec kernel by default. I had to

|

||||||

|

end up writing [this gem of a systemd service on

|

||||||

|

boot](https://github.com/Xe/dotfiles/blob/master/ansible/roles/soyoustart/files/conditional-kexec.sh)

|

||||||

|

which did a [`kexec`](https://en.wikipedia.org/wiki/Kexec) to boot into a

|

||||||

|

non-gimped kernel on boot. It was a huge hack and somehow worked every time. I

|

||||||

|

was still afraid to reboot the machine though.

|

||||||

|

|

||||||

|

Sure is a lot of RAM for the cost though.

|

||||||

|

|

||||||

|

## Option 3: NixOS on top of Digital Ocean

|

||||||

|

|

||||||

|

This shares most of the same problems as the SoYouStart or Kimsufi nodes. However,

|

||||||

|

nixos-infect is known to have a higher success rate on Digital Ocean droplets.

|

||||||

|

It would be really nice if Digital Ocean let you upload arbitrary ISO files and

|

||||||

|

go from there, but that is apparently not the world we live in.

|

||||||

|

|

||||||

|

8 GB of RAM would be _way more than enough_ for what I am doing with these

|

||||||

|

services.

|

||||||

|

|

||||||

|

## Option 4: NixOS on top of Vultr

|

||||||

|

|

||||||

|

Vultr is probably my top pick for this. You can upload an arbitrary ISO file,

|

||||||

|

kick off your VPS from it and install it like normal. I have a little shell

|

||||||

|

server shared between some friends built on top of such a Vultr node. It works

|

||||||

|

beautifully.

|

||||||

|

|

||||||

|

The fact that it has the same cost as the Digital Ocean droplet just adds to the

|

||||||

|

perfection of this option.

|

||||||

|

|

||||||

|

## Costs

|

||||||

|

|

||||||

|

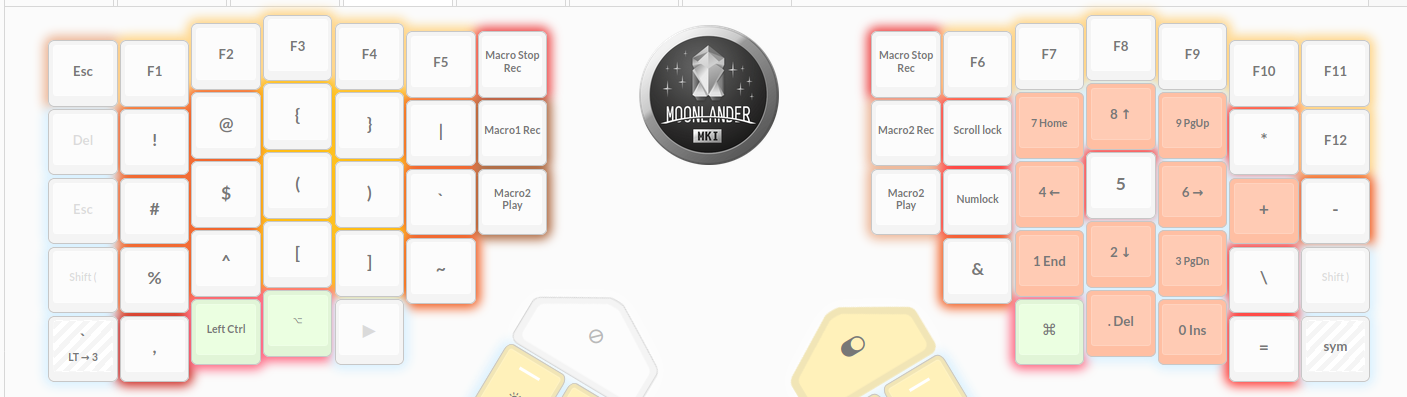

Here is the cost table I've drawn up while comparing these options:

|

||||||

|

|

||||||

|

| Option        | Ram                | Disk                                  | Cost per month | Hacks        |
| :------------ | :----------------- | :------------------------------------ | :------------- | :----------- |
| Do nothing    | 6 GB (4 GB usable) | Not really usable, volumes cost extra | $60/month      | Very Yes     |
| SoYouStart    | 32 GB              | 2x2TB SAS                             | $40/month      | Yes          |
| Kimsufi       | 32 GB              | 2x2TB SAS                             | $35/month      | Yes          |
| Digital Ocean | 8 GB               | 160 GB SSD                            | $40/month      | On provision |
| Vultr         | 8 GB               | 160 GB SSD                            | $40/month      | No           |
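
One rough way to compare these rows is cost per GB of RAM, plus what a move would save over a year. This is a back-of-the-envelope sketch using only the numbers from the table. The `cost_per_gb` and `yearly_savings` helper names are made up here, and RAM is far from the only thing that matters:

```shell
# cost_per_gb COST RAM_GB: monthly cost per GB of RAM, to two decimal places.
cost_per_gb() {
  awk -v cost="$1" -v ram="$2" 'BEGIN { printf "%.2f\n", cost / ram }'
}

# yearly_savings OLD NEW: dollars saved per year when the monthly bill
# drops from OLD to NEW.
yearly_savings() {
  echo $(( ($1 - $2) * 12 ))
}

echo "Do nothing: \$$(cost_per_gb 60 4) per usable GB of RAM"
echo "Kimsufi:    \$$(cost_per_gb 35 32) per GB of RAM"
echo "Vultr:      \$$(cost_per_gb 40 8) per GB of RAM"
echo "Moving from the cluster to Vultr saves \$$(yearly_savings 60 40) per year"
```

By this measure the current cluster is the most expensive option by a wide margin, and the OVH-brand boxes are the cheapest if old CPUs and provisioning hacks are acceptable.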

|

||||||

|

|

||||||

|

I think I am going to go with the Vultr option. I will need to modernize some of

|

||||||

|

my services to support being deployed in NixOS in order to do this, however I

|

||||||

|

think that I will end up creating a more robust setup in the process. At least I

|

||||||

|

will create a setup that allows me to more easily maintain my own backups rather

|

||||||

|

than just relying on DigitalOcean snapshots and praying like I do with the

|

||||||

|

Kubernetes setup.

|

||||||

|

|

||||||

|

Thanks farcaller, Marbles, John Rinehart and others for reviewing this post

|

||||||

|

prior to it being published.

|

||||||

|

|

@ -0,0 +1,51 @@

|

||||||

|

---

|

||||||

|

title: "Mara: Sh0rk of Justice: Version 1.0.0 Released"

|

||||||

|

date: 2020-12-28

|

||||||

|

tags:

|

||||||

|

- gameboy

|

||||||

|

- gbstudio

|

||||||

|

- indiedev

|

||||||

|

---

|

||||||

|

|

||||||

|

# Mara: Sh0rk of Justice: Version 1.0.0 Released

|

||||||

|

|

||||||

|

Over the long weekend I found out about a program called [GB Studio](https://www.gbstudio.dev).

|

||||||

|

It's a simple drag-and-drop interface that you can use to make homebrew games for the

|

||||||

|

[Nintendo Game Boy](https://en.wikipedia.org/wiki/Game_Boy). I was intrigued and I had

|

||||||

|

some time, so I set out to make a little top-down adventure game. After a few days of

|

||||||

|

tinkering I came up with an idea and created Mara: Sh0rk of Justice.

|

||||||

|

|

||||||

|

[You made a game about me? :D](conversation://Mara/hacker)

|

||||||

|

|

||||||

|

> Guide Mara through the spooky dungeon in order to find all of its secrets. Seek out

|

||||||

|

> the secrets of the spooks! Defeat the evil mage! Solve the puzzles! Find the items

|

||||||

|

> of power! It's up to you to save us all, Mara!

|

||||||

|

|

||||||

|

You can play it in an `<iframe>` on itch.io!

|

||||||

|

|

||||||

|

<iframe frameborder="0" src="https://itch.io/embed/866982?dark=true" width="552" height="167"><a href="https://withinstudios.itch.io/mara-sh0rk-justice">Mara: Sh0rk of Justice by Within</a></iframe>

|

||||||

|

|

||||||

|

## Things I Learned

|

||||||

|

|

||||||

|

Game development is hard. Even with tools that help you do it, there's a limit to how

|

||||||

|

much you can get done at once. Everything links together. You really need to test

|

||||||

|

things both in isolation and as a cohesive whole.

|

||||||

|

|

||||||

|

I cannot compose music to save my life. I used free-to-use music assets from the

|

||||||

|

[GB Studio Community Assets](https://github.com/DeerTears/GB-Studio-Community-Assets)

|

||||||

|

pack to make this game. I think I managed to get everything acceptable.

|

||||||

|

|

||||||

|

GB Studio is rather inflexible. It feels like it's there to really help you get

|

||||||

|

started from a template. Even though you can make the whole game from inside GB

|

||||||

|

Studio, I probably should have ejected the engine to source code so I could

|

||||||

|

customize some things like the jump button being weird in platforming sections.

|

||||||

|

|

||||||

|

Pixel art is an art of its own. I used a lot of free to use assets from itch.io for

|

||||||

|

the tileset and a few NPCs. I created the rest myself using

|

||||||

|

[Aseprite](https://www.aseprite.org). Getting Mara's walking animation to a point

|

||||||

|

that I thought was acceptable was a chore. I found a nice compromise though.

|

||||||

|

|

||||||

|

---

|

||||||

|

|

||||||

|

Overall I'm happy with the result as a whole. Try it out, see how you like it and

|

||||||

|