forked from cadey/xesite

Compare commits

122 Commits

| Author | SHA1 | Date |
|---|---|---|
| | 33b7dfa21c | |
| | d7b817b22d | |
| | 08ccd40888 | |
| | 3fcbe4ebbf | |
| | 8759f9d590 | |
| | b36afa9dfd | |
| | f91a5a7878 | |
| | b32f5a25af | |
| | 18ae8a01f8 | |
| | 04151593cc | |
| | a6ddd7e3a6 | |
| | f8dae6fa5e | |
| | ed1e8eecee | |
| | 3020a92086 | |
| | d93bdc58a6 | |
| | 7f6de2cb09 | |
| | 8b6056fc09 | |
| | 5d7daf179e | |
| | 6be8b24dd2 | |
| | 03fa2e33a1 | |
| | bf95a3f7e0 | |
| | 70b2cc5408 | |
| | b5b804e083 | |
| | 7828552cca | |
| | 000ed82301 | |
| | 817d426314 | |
| | 13f28eae67 | |
| | a1974a5948 | |
| | 15a130cc3d | |
| | 9f977b3882 | |
| | 50dcfbb19d | |
| | aeab6536e0 | |
| | 78485b7d49 | |
| | cea1e230da | |
| | 3a592f99db | |
| | d0c2df003b | |
| | ad6fba4c79 | |
| | 7541df7781 | |
| | 5c09a72207 | |
| | 792be1eb55 | |
| | aa10dce9ee | |
| | aca8b80892 | |
| | dc3f6471e7 | |
| | 396150f72b | |
| | 2645966f85 | |
| | 7d667ef2ae | |
| | 7bf1f82c90 | |
| | 1bb8e9302c | |
| | a57512d083 | |
| | c4cf9c156a | |
| | 60196d90ed | |
| | 7c7904c17c | |
| | 7cf1429afa | |
| | 554dbbb53a | |
| | 2d00c19205 | |
| | bdc64f78f2 | |
| | ff64215d07 | |
| | a12c957abe | |
| | 71c33c083a | |
| | ac12ebf063 | |
| | ffab774e6a | |
| | f8e2212549 | |
| | 356f12cbdc | |
| | 06b00eb7c3 | |
| | b1277d209d | |
| | 901a306002 | |
| | e545abeb1a | |
| | 08d96305af | |
| | 019af1d532 | |
| | 7c920ca5e9 | |
| | 8c88f66c67 | |
| | d4145bd406 | |
| | e080361dd4 | |
| | ba49eb8f12 | |
| | 00d101b393 | |
| | b53cd41193 | |
| | 09aba58ebd | |
| | 230df6f54e | |
| | 565949cec2 | |
| | e2b9f384bf | |
| | 0e22f4c224 | |
| | 62014b2dba | |
| | 62dc15c339 | |
| | 2432b5a4fc | |
| | 7a3d64fec1 | |
| | b3bdb9388a | |
| | c5cbcc47ec | |
| | c5ff939007 | |
| | c55ef48ba3 | |
| | bdc85b1536 | |
| | b1264c8b69 | |
| | 1d0066eeda | |
| | 43a542e192 | |
| | 7e92f3c7a9 | |
| | 737b4700a0 | |
| | 22ab51b29c | |
| | b312b9931d | |
| | e057c81325 | |
| | fd6d806365 | |
| | e351243126 | |
| | eee6502aa1 | |
| | c07ac3b6c0 | |
| | 06d4bf7d69 | |
| | 261d0b65df | |
| | 628b572455 | |
| | 6be7bd7613 | |
| | cd1ce7785a | |
| | a257536b58 | |
| | add0fa3e37 | |
| | e03e1712b1 | |
| | dacc7159d7 | |
| | 021f70fd90 | |
| | f7aa184c20 | |
| | 1dfd47708f | |
| | 4d05bd7347 | |
| | 22c29ce498 | |
| | 1019e42fa7 | |
| | 4170e3b78c | |
| | ad2f5c739f | |
| | 05135edcbe | |
| | 9566b790bc | |
| | 67de839da8 | |


Cargo.toml

|

|

@ -9,13 +9,14 @@ repository = "https://github.com/Xe/site"

|

|||

# See more keys and their definitions at https://doc.rust-lang.org/cargo/reference/manifest.html

|

||||

|

||||

[dependencies]

|

||||

axum = "0.5"

|

||||

axum = { version = "0.5", features = ["headers"] }

|

||||

axum-macros = "0.2"

|

||||

axum-extra = "0.2"

|

||||

axum-extra = "0.3"

|

||||

color-eyre = "0.6"

|

||||

chrono = "0.4"

|

||||

comrak = "0.12.1"

|

||||

comrak = "0.14.0"

|

||||

derive_more = "0.99"

|

||||

dirs = "4"

|

||||

envy = "0.4"

|

||||

estimated_read_time = "1"

|

||||

futures = "0.3"

|

||||

|

|

@ -26,11 +27,14 @@ hyper = "0.14"

|

|||

kankyo = "0.3"

|

||||

lazy_static = "1.4"

|

||||

log = "0.4"

|

||||

lol_html = "0.3"

|

||||

maud = { version = "0.23.0", features = ["axum"] }

|

||||

mime = "0.3.0"

|

||||

prometheus = { version = "0.13", default-features = false, features = ["process"] }

|

||||

rand = "0"

|

||||

regex = "1"

|

||||

reqwest = { version = "0.11", features = ["json"] }

|

||||

serde_dhall = "0.11.0"

|

||||

serde_dhall = "0.11.2"

|

||||

serde = { version = "1", features = ["derive"] }

|

||||

serde_yaml = "0.8"

|

||||

sitemap = "0.4"

|

||||

|

|

@ -44,9 +48,11 @@ xml-rs = "0.8"

|

|||

url = "2"

|

||||

uuid = { version = "0.8", features = ["serde", "v4"] }

|

||||

|

||||

xesite_types = { path = "./lib/xesite_types" }

|

||||

|

||||

# workspace dependencies

|

||||

cfcache = { path = "./lib/cfcache" }

|

||||

jsonfeed = { path = "./lib/jsonfeed" }

|

||||

xe_jsonfeed = { path = "./lib/jsonfeed" }

|

||||

mi = { path = "./lib/mi" }

|

||||

patreon = { path = "./lib/patreon" }

|

||||

|

||||

|

|

@ -55,7 +61,7 @@ version = "0.4"

|

|||

features = [ "full" ]

|

||||

|

||||

[dependencies.tower-http]

|

||||

version = "0.2"

|

||||

version = "0.3"

|

||||

features = [ "full" ]

|

||||

|

||||

# os-specific dependencies

|

||||

|

|

|

|||

|

|

@ -7,7 +7,7 @@ tags:

|

|||

---

|

||||

|

||||

[*Last time in the christine dot website cinematic

|

||||

universe:*](https://christine.website/blog/unix-domain-sockets-2021-04-01)

|

||||

universe:*](https://xeiaso.net/blog/unix-domain-sockets-2021-04-01)

|

||||

|

||||

*Unix sockets started to be used to grace the cluster. Things were at peace.

|

||||

Then, a realization came through:*

|

||||

|

|

|

|||

|

|

@ -0,0 +1,65 @@

|

|||

---

|

||||

title: "My Stance on Toxicity About Programming Languages"

|

||||

date: 2022-05-23

|

||||

tags:

|

||||

- toxicity

|

||||

- culture

|

||||

---

|

||||

|

||||

I have been toxic and hateful in the past about programming language choice. I

|

||||

now realize this is a mistake. I am sorry if my being toxic about programming

|

||||

languages has harmed you.

|

||||

|

||||

By toxic, I mean doing things or saying things that imply people are lesser for

|

||||

having different experience and preferences about programming languages. I have

|

||||

seen people imply that using languages like PHP or Node.js means that they are

|

||||

idiots or similar. This is toxic behavior and I do not want to be a part of it

|

||||

in the future.

|

||||

|

||||

I am trying to not be toxic about programming languages in the future. Each

|

||||

programming language is made to solve the tasks it was designed to solve and

|

||||

being toxic about it helps nobody. By being toxic about programming languages

|

||||

like this, I only serve to spread toxicity and then see it be repeated as the

|

||||

people that look up to me as a role model will then strive to repeat my

|

||||

behavior. This cannot continue. I do not want my passion projects to become

|

||||

synonymous with toxicity and vitriol. I do not want to be known as the person

|

||||

that hates $PROGRAMMING_LANGUAGE. I want to break the cycle.

|

||||

|

||||

With this, I want to confirm that I will not write any more attack articles

|

||||

about programming languages. I am doing my best to ensure that this will also

|

||||

spread to my social media actions, conference talks and as many other things as

|

||||

I can.

|

||||

|

||||

I challenge all of you readers to take this challenge too. Don't spread toxicity

|

||||

about programming languages. All of the PHP hate out there is a classic example

|

||||

of this. PHP is a viable programming language that is used by a large percentage

|

||||

of the internet. By insinuating that everyone using PHP is inferior (or worse)

|

||||

you only serve to push people away and worst case cause them to be toxic about

|

||||

the things you like. Toxicity breeds toxicity and the best way to stop it is to

|

||||

be the one to break the cycle and have others follow in your footsteps.

|

||||

|

||||

I have been incredibly toxic about PHP in the past. PHP is one of if not the

|

||||

most widely used programming languages for writing applications that run on a

|

||||

web server. Its design makes it dead simple to understand how incoming HTTP

|

||||

requests relate to files on the disk. There is no compile step. The steps to

|

||||

make a change are to open the file on the server, make the change you want to

|

||||

see and press F5. This is a developer experience that is unparalleled in most

|

||||

HTTP frameworks that I've seen in other programming environments. PHP users

|

||||

deserve better than to be hated on. PHP is an incredibly valid choice and I'm

|

||||

sure that with the right linters and human review in the mix it can be as secure

|

||||

as "properly written" services in Go, Java and Rust.

|

||||

|

||||

<xeblog-conv name="Cadey" mood="enby">Take the "don't be toxic about programming

|

||||

languages" challenge! Just stop with the hate, toxicity and vitriol. Our jobs

|

||||

are complicated enough already. Being toxic to each other about how we decide to

|

||||

solve problems is a horrible life decision at the least and actively harmful to

|

||||

people's careers at most. Just stop.</xeblog-conv>

|

||||

|

||||

---

|

||||

|

||||

<xeblog-conv name="Mara" mood="hacker">This post is not intended as a sub-blog.

|

||||

If you feel that this post is calling you out, please don't take this

|

||||

personally. There is a lot of toxicity out there and it will take a long time to

|

||||

totally disarm it, even with people dedicated to doing it. This is an adaptation

|

||||

of [this twitter

|

||||

thread](https://twitter.com/theprincessxena/status/1527765025561186304).</xeblog-conv>

|

||||

|

|

@ -0,0 +1,175 @@

|

|||

---

|

||||

title: "Anbernic Win600 First Impressions"

|

||||

date: 2022-07-14

|

||||

series: reviews

|

||||

---

|

||||

|

||||

Right now PC gaming is largely a monopoly centered around Microsoft Windows.

|

||||

Many PC games only support Windows and a large fraction of them use technical

|

||||

means to prevent gamers on other platforms from playing those games. In 2021,

|

||||

Valve introduced the [Steam Deck](https://www.steamdeck.com/en/) as an

|

||||

alternative to that monopoly. There's always been a small, underground market

|

||||

for handheld gaming PCs that let you play PC games on the go, but it's always

|

||||

been a very niche market dominated by a few big players that charge a lot of

|

||||

money relative to the game experience they deliver. The Steam Deck radically

|

||||

changed this equation and it's still on backorder to this day. This has made

|

||||

other manufacturers take notice and one of them was Anbernic.

|

||||

|

||||

[Anbernic](https://anbernic.com/) is a company that specializes in making retro

|

||||

emulation handheld gaming consoles. Recently they released their

|

||||

[Win600](https://anbernic.com/products/new-anbernic-win600) handheld. It has a

|

||||

Radeon Silver 3020e or a Radeon Silver 3050e and today I am going to give you my

|

||||

first impressions of it. I have the 3050e version.

|

||||

|

||||

<xeblog-conv name="Mara" mood="happy">A review of the Steam Deck is coming up

|

||||

soon!</xeblog-conv>

|

||||

|

||||

One of the real standout features of this device is that Anbernic has been

|

||||

working with Valve to allow people to run SteamOS on it! This makes us all one

|

||||

step closer to having a viable competitor to Windows for gaming. SteamOS is

|

||||

fantastic and has revolutionized gaming on Linux. It's good to see it coming to

|

||||

more devices.

|

||||

|

||||

## Out of the box

|

||||

|

||||

I ordered my Win600 about 3 hours after sales opened. It arrived in a week and

|

||||

came in one of those china-spec packages made out of insulation. If you've ever

|

||||

ordered things from AliExpress you know what I'm talking about. It's just a

|

||||

solid mass of insulation.

|

||||

|

||||

[](https://cdn.xeiaso.net/file/christine-static/img/FXozAEjUsAQEg9d.jpeg)

|

||||

|

||||

The unboxing experience was pretty great. The console came with:

|

||||

|

||||

* The console

|

||||

* A box containing the charger and cable

|

||||

* A slip of paper telling you how to set up Windows without a Wi-Fi connection

|

||||

* A screen protector and cleaning cloth (my screen protector was broken in the

|

||||

box, so much for all that insulation lol)

|

||||

* A user manual that points out obvious things about your device

|

||||

|

||||

One of the weirder things about this device is the mouse/gamepad slider on the

|

||||

side. It changes the USB devices on the system and makes the gamepad either act

|

||||

like an xinput joypad or a mouse and keyboard. The mouse and keyboard controls

|

||||

are strange. Here are the controls I have discovered so far:

|

||||

|

||||

* R1 is right click

|

||||

* L1 is left click

|

||||

* A is enter

|

||||

* The right stick very slowly skitters the mouse around the screen

|

||||

* The left stick is a super aggressive scroll wheel

|

||||

|

||||

Figuring out these controls on the fly without any help from the manual meant

|

||||

that I had taken long enough in the setup screen that [Cortana started to pipe

|

||||

up](https://youtu.be/yn6bSm9HXFg) and guided me through the setup process. This

|

||||

was not fun. I had to connect an external keyboard to finish setup.

|

||||

|

||||

<xeblog-conv name="Cadey" mood="coffee">This is probably not Anbernic's fault.

|

||||

Windows is NOT made for smaller devices like this and oh god it

|

||||

shows.</xeblog-conv>

|

||||

|

||||

## Windows 10 "fun"

|

||||

|

||||

There is also a keyboard button on the side. When you are using Windows, this

|

||||

button summons a soft keyboard. Not the nice to use modern soft keyboard though,

|

||||

the legacy terrible soft keyboard that Microsoft has had for forever and never

|

||||

really updated. Using it is grating, like rubbing sandpaper all over your hands.

|

||||

This made entering my Wi-Fi password an adventure. It took my husband and me

|

||||

15 minutes to get the device to connect to Wi-Fi. 15 minutes to connect to

|

||||

Wi-Fi.

|

||||

|

||||

Once it was connected to Wi-Fi, I tried to update the system to the latest

|

||||

version of Windows. The settings update crashed. Windows Update's service also

|

||||

crashed. Windows Update also randomly got stuck trying to start the installation

|

||||

process for updates. Once updates worked and finished installing, I rebooted.

|

||||

|

||||

I tried to clean up the taskbar by disabling all of the random icons that

|

||||

product managers at Microsoft want you to see. The Cortana button was stuck on

|

||||

and I was unable to disable it. Trying to hide the Windows meet icon crashed

|

||||

explorer.exe. I don't know what part of this is Windows going out of its way to

|

||||

mess with me (I'm cursed) and what part of it is Windows really not being

|

||||

optimized for this hardware in any sense of the word.

|

||||

|

||||

Windows is really painful on this device. It's obvious that Windows was not made

|

||||

with this device in mind. There are buttons to hack around this (but not as far

|

||||

as the task manager button I've seen on other handhelds), but overall trying to

|

||||

use Windows with a game console is like trying to saw a log with a pencil

|

||||

sharpener. It's just the wrong tool for the job. Sure you _can_ do it, but _can_

|

||||

and _should_ are different words in English.

|

||||

|

||||

Another weird thing about Windows on this device is that the screen only reports

|

||||

a single display mode: 1280x720. It has no support for lower resolutions to run

|

||||

older games that only work on those lower resolutions. In most cases this will

|

||||

not be an issue, but if you want to lower the resolution of a game to squeeze

|

||||

more performance out then you may have issues.

|

||||

|

||||

## Steam

|

||||

|

||||

In a moment of weakness, I decided to start up Steam. Steam defaulted to Big

|

||||

Picture mode and its first-time-user-experience made me set up Wi-Fi again.

|

||||

There was no way to bypass it. I got out my moonlander again and typed in my

|

||||

Wi-Fi password again, and then I downloaded Sonic Adventure 2 as a test for how

|

||||

games feel on it. Sonic Adventure 2 is a very lightweight game (you can play it

|

||||

for like 6.5 hours on a full charge of the Steam Deck) and I've played it to

|

||||

_death_ over the years. I know how the game _should_ feel.

|

||||

|

||||

[](https://cdn.xeiaso.net/file/christine-static/img/FXpIjhwUIAUEujg.jpeg)

|

||||

|

||||

City Escape ran at a perfect 60 FPS at the device's native resolution. The main

|

||||

thing I noticed though was the position of the analog sticks. Based on the

|

||||

design of the device, I'm pretty sure they were going for something with a

|

||||

PlayStation DualShock 4 layout with the action buttons on the top and the sticks

|

||||

on the bottom. The sticks are too far down on the device. Playing Sonic

|

||||

Adventure 2 was kind of painful.

|

||||

|

||||

## SteamOS

|

||||

|

||||

So I installed SteamOS on the device. Besides a weird issue with 5 GHz Wi-Fi not

|

||||

working and updates requiring me to reboot the device IMMEDIATELY after

|

||||

connecting to Wi-Fi, it works great. I can install games and they run. The DPI

|

||||

for SteamOS is quite wrong though. All the UI elements are painfully small. For

|

||||

comparison, I put my Steam Deck on the same screen as I had on the Win600. The

|

||||

Steam Deck is on top and the Win600 is on the bottom.

|

||||

|

||||

[](https://f001.backblazeb2.com/file/christine-static/img/FXqSz_tVsAAU0wf.jpeg)

|

||||

|

||||

Yeah. It leaves things to be desired.

|

||||

|

||||

When I had SteamOS set up, I did find something that makes the Win600 slightly

|

||||

better than the Steam Deck. When you are adding games to Steam with Emulation

|

||||

Station you need to close the Steam client to edit the leveldb files that Steam

|

||||

uses to track what games you can launch. On the Steam Deck, the Steam client

|

||||

also enables the built-in controllers to act as a keyboard and mouse. This means

|

||||

that you need to poke around and pray with the touchscreen to get EmuDeck games

|

||||

up and running. The mouse/controller switch on the Win600 makes this slightly

|

||||

more convenient because the controllers can always poorly act as a mouse and

|

||||

keyboard.

|

||||

|

||||

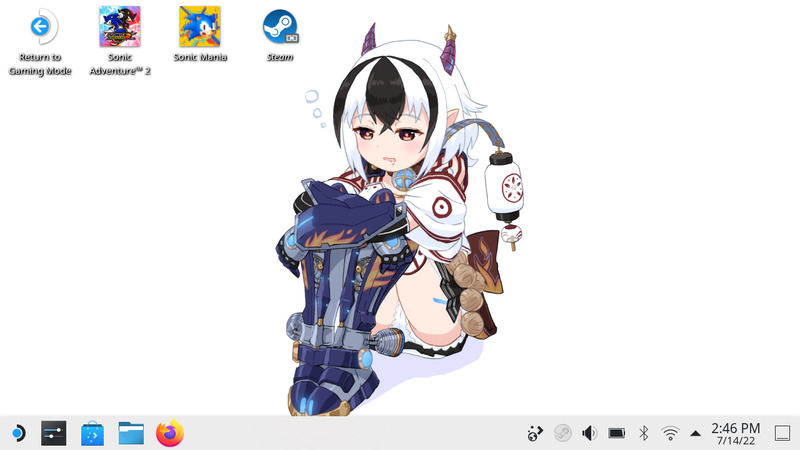

When you are in KDE on the Win600, you don't get a soft keyboard at all. This is

|

||||

mildly inconvenient, but can be fixed with the moonlander yet again. Here's a

|

||||

screenshot of what my KDE desktop on the Win600 looks like:

|

||||

|

||||

[](https://f001.backblazeb2.com/file/christine-static/img/Screenshot_20220714_144655.png)

|

||||

|

||||

Overall, SteamOS is a lot more ergonomic in my opinion and will let you play

|

||||

games to your heart's content.

|

||||

|

||||

The D-pad feels really good. I love how it responds. When I did a little bit of

|

||||

Sonic Mania I never felt like I was inaccurate. There were some weird audio

|

||||

hitches on Sonic Mania though where the music would cut out randomly. Not sure

|

||||

what's going on with that. I could play through entire Pokemon games with that

|

||||

D-pad.

|

||||

|

||||

## Conclusions for now

|

||||

|

||||

Overall I'm getting the feeling that this device is _okay_. It's not great, it's

|

||||

not terrible, but it's okay. I need to get some more experience with it, but so

|

||||

far it seems that this device really does have a weight class and oh god if you

|

||||

play a game outside its weight class your UX goes to shit instantly. The battery

|

||||

life leaves _a lot_ to be desired so far. However it does work. It's hard to not

|

||||

compare this to the Steam Deck, but it's so much less in comparison to the Steam

|

||||

Deck.

|

||||

|

||||

I don't know how I feel about this device. I'm not sure it's worth the money. I

|

||||

need to get more experience with it. I'll have a better sense of all this when I

|

||||

write my full review. Stay tuned for that!

|

||||

|

|

@ -21,7 +21,7 @@ up being the _worst_ experience that I have using an aarch64 MacBook.

|

|||

[This website](https://github.com/Xe/site) is a fairly complicated webapp

|

||||

written in Rust. As such it makes for a fairly decent compile stress test. I'm

|

||||

going to do a compile test against my [Ryzen

|

||||

3600](https://christine.website/blog/nixos-desktop-flow-2020-04-25) with this M1

|

||||

3600](https://xeiaso.net/blog/nixos-desktop-flow-2020-04-25) with this M1

|

||||

MacBook Air.

|

||||

|

||||

My tower is running this version of Rust:

|

||||

|

|

|

|||

|

|

@ -181,7 +181,7 @@ server, my kubernetes cluster and my dokku server:

|

|||

- hlang -> https://h.christine.website

|

||||

- mi -> https://mi.within.website

|

||||

- printerfacts -> https://printerfacts.cetacean.club

|

||||

- xesite -> https://christine.website

|

||||

- xesite -> https://xeiaso.net

|

||||

- graphviz -> https://graphviz.christine.website

|

||||

- idp -> https://idp.christine.website

|

||||

- oragono -> ircs://irc.within.website:6697/

|

||||

|

|

|

|||

|

|

@ -0,0 +1,125 @@

|

|||

---

|

||||

title: "Site Update: The Big Domain Move To xeiaso.net"

|

||||

date: 2022-05-28

|

||||

tags:

|

||||

- dns

|

||||

---

|

||||

|

||||

Hello all!

|

||||

|

||||

If you take a look in the URL bar of your browser (or on the article URL section

|

||||

of your feed reader), you should see that there is a new domain name! Welcome to

|

||||

[xeiaso.net](https://xeiaso.net)!

|

||||

|

||||

Hopefully nothing broke in the process of moving things over. I tried to make

|

||||

sure that everything would forward over and today I'm going to explain how I did

|

||||

that.

|

||||

|

||||

I have really good SEO on my NixOS articles, and for my blog in general. I did

|

||||

not want to risk tanking that SEO when I moved domain names, so I have been

|

||||

putting this off for the better part of a year. As for why now? I got tired of

|

||||

internets complaining that the URL was "christine dot website" when I wanted to

|

||||

be called "Xe". Now you have no excuse.

|

||||

|

||||

So the first step was to be sure that everything got forwarded over to the new

|

||||

domain. After buying the domain name and setting everything up in Cloudflare

|

||||

(including moving my paid plan over), I pointed the new domain at my server and

|

||||

then set up a new NixOS configuration block to have that domain name point to my

|

||||

site binary:

|

||||

|

||||

```nix

|

||||

services.nginx.virtualHosts."xeiaso.net" = {

|

||||

locations."/" = {

|

||||

proxyPass = "http://unix:${toString cfg.sockPath}";

|

||||

proxyWebsockets = true;

|

||||

};

|

||||

forceSSL = cfg.useACME;

|

||||

useACMEHost = "xeiaso.net";

|

||||

extraConfig = ''

|

||||

access_log /var/log/nginx/xesite.access.log;

|

||||

'';

|

||||

};

|

||||

```

|

||||

|

||||

After that was working, I then got a list of all the things that probably

|

||||

shouldn't be redirected from. In most cases, most HTTP clients should do the

|

||||

right thing when getting a permanent redirect to a new URL. However, we live in

|

||||

a fallen world where we cannot expect clients to do the right thing. Especially

|

||||

RSS feed readers.

|

||||

|

||||

So I made a list of all the things that I was afraid to make permanent redirects

|

||||

for and here it is:

|

||||

|

||||

* `/jsonfeed` - a JSONFeed package for Go

|

||||

([docs](https://pkg.go.dev/christine.website/jsonfeed)), I didn't want to

|

||||

break builds by issuing a permanent redirect that would not match the

|

||||

[go.mod](https://tulpa.dev/Xe/jsonfeed/src/branch/master/go.mod) file.

|

||||

* `/.within/health` - the healthcheck route used by monitoring. I didn't want to

|

||||

find out if NodePing blew up on a 301.

|

||||

* `/.within/website.within.xesite/new_post` - the URL used by the [Android

|

||||

app](https://play.google.com/store/apps/details?id=website.christine.xesite)

|

||||

widget to let you know when a new post is published. I didn't want to find out

|

||||

if Android's HTTP library handles redirects properly or not.

|

||||

* `/blog.rss` - RSS feed readers are badly implemented. I didn't want to find

|

||||

out if it would break people's feeds entirely. I actually care about people

|

||||

that read this blog over RSS and I'm sad that poorly written feed readers

|

||||

punish this server so much.

|

||||

* `/blog.atom` - See above.

|

||||

* `/blog.json` - See above.

|

||||

|

||||

Now that I have the list of URLs to not forward, I can finally write the small

|

||||

bit of Nginx config that will set up permanent forwards (HTTP status code 301)

|

||||

for every link pointing to the old domain. It will look something like this:

|

||||

|

||||

```nginx

|

||||

location / {

|

||||

return 301 https://xeiaso.net$request_uri;

|

||||

}

|

||||

```

|

||||

|

||||

<xeblog-conv name="Mara" mood="hacker">Note that it's using `$request_uri` and

|

||||

not just `$uri`. If you use `$uri` you run the risk of [CRLF

|

||||

injection](https://reversebrain.github.io/2021/03/29/The-story-of-Nginx-and-uri-variable/),

|

||||

which will allow any random attacker to inject HTTP headers into the redirect

|

||||

responses the server sends. This is not a good thing to have happen, to say the

|

||||

least.</xeblog-conv>
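To make the difference between the two variables concrete, here is a minimal Python sketch of the failure mode. It is not how Nginx is implemented: `location_header`, the `use_decoded` flag and the attack path are hypothetical names made up for illustration. The only assumption carried over from Nginx is that `$uri` holds the normalized, percent-decoded path while `$request_uri` holds the raw request URI as the client sent it (the sketch ignores that `$uri` also drops the query string).

```python
from urllib.parse import unquote

NEW_ORIGIN = "https://xeiaso.net"


def location_header(raw_request_uri: str, use_decoded: bool) -> str:
    """Build the Location header line a redirect would send.

    use_decoded=True imitates a percent-decoded value (like $uri).
    use_decoded=False imitates the raw value (like $request_uri).
    """
    path = unquote(raw_request_uri) if use_decoded else raw_request_uri
    return f"Location: {NEW_ORIGIN}{path}"


# Hypothetical attack path: %0d%0a is a percent-encoded CR LF pair.
attack = "/blog%0d%0aSet-Cookie:%20pwned=1"

safe = location_header(attack, use_decoded=False)
unsafe = location_header(attack, use_decoded=True)

# The raw form stays on one header line; the decoded form splits in two,
# so the attacker's text becomes a header of its own.
print(safe.split("\r\n"))
print(unsafe.split("\r\n"))
```

With the raw value the encoded bytes travel through untouched and the client just sees an odd-looking URL. With the decoded value the CR LF ends the `Location` header early and everything after it is read as a second header.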

|

||||

|

||||

So I wrote a little bit of NixOS config that automatically bridges the gap:

|

||||

|

||||

```nix

|

||||

services.nginx.virtualHosts."christine.website" = let proxyOld = {

|

||||

proxyPass = "http://unix:${toString cfg.sockPath}";

|

||||

proxyWebsockets = true;

|

||||

}; in {

|

||||

locations."/jsonfeed" = proxyOld;

|

||||

locations."/.within/health" = proxyOld;

|

||||

locations."/.within/website.within.xesite/new_post" = proxyOld;

|

||||

locations."/blog.rss" = proxyOld;

|

||||

locations."/blog.atom" = proxyOld;

|

||||

locations."/blog.json" = proxyOld;

|

||||

locations."/".extraConfig = ''

|

||||

return 301 https://xeiaso.net$request_uri;

|

||||

'';

|

||||

forceSSL = cfg.useACME;

|

||||

useACMEHost = "christine.website";

|

||||

extraConfig = ''

|

||||

access_log /var/log/nginx/xesite_old.access.log;

|

||||

'';

|

||||

};

|

||||

```

|

||||

|

||||

This will point all the scary paths to the site itself and have

|

||||

`https://christine.website/whatever` get forwarded to

|

||||

`https://xeiaso.net/whatever`. This makes sure that every single link that

|

||||

anyone has ever posted will get properly forwarded. This makes link rot

|

||||

literally impossible, and helps ensure that I keep my hard-earned SEO.
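The routing decision that config encodes can be sketched in a few lines of Python. This is a simplified model, not a replacement for Nginx: `route_old_domain` and `PASSTHROUGH_PREFIXES` are hypothetical names, the example post path is made up, and the plain prefix check only approximates how Nginx picks the longest matching prefix location.

```python
NEW_ORIGIN = "https://xeiaso.net"

# Paths that keep being served on the old domain instead of redirecting.
PASSTHROUGH_PREFIXES = (
    "/jsonfeed",
    "/.within/health",
    "/.within/website.within.xesite/new_post",
    "/blog.rss",
    "/blog.atom",
    "/blog.json",
)


def route_old_domain(request_uri: str):
    """Return ("proxy", None) for passthrough paths, else (301, new URL)."""
    # Location matching only looks at the path, not the query string.
    path = request_uri.split("?", 1)[0]
    if any(path.startswith(prefix) for prefix in PASSTHROUGH_PREFIXES):
        return ("proxy", None)
    # Everything else gets a permanent redirect with the full raw URI,
    # query string included, appended to the new origin.
    return (301, NEW_ORIGIN + request_uri)


print(route_old_domain("/blog.rss"))
print(route_old_domain("/blog/some-post?utm=1"))
```

Because the redirect reuses the whole request URI, any old link maps to the same path and query on the new domain without a per-URL rule.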

|

||||

|

||||

I also renamed my email address to `me@xeiaso.net`. Please update your address

|

||||

books and spam filters accordingly. Also update my name to `Xe Iaso` if you

|

||||

haven't already.

|

||||

|

||||

I've got some projects on the back burner that will make this blog even better!

|

||||

Stay tuned and stay frosty.

|

||||

|

||||

What was formerly known as the "christine dot website cinematic universe" is now

|

||||

known as the "xeiaso dot net cinematic universe".

|

||||

|

|

@ -78,7 +78,7 @@ for this:

|

|||

|

||||

```

|

||||

Xe Iaso (zi ai-uh-so)

|

||||

https://christine.website

|

||||

https://xeiaso.net

|

||||

|

||||

.i la budza pu cusku lu

|

||||

<<.i ko snura .i ko kanro

|

||||

|

|

|

|||

|

|

@ -36,7 +36,7 @@ Your website should include at least the following things:

|

|||

- Links to or words about projects of yours that you are proud of

|

||||

- Some contact information (an email address is a good idea too)

|

||||

|

||||

If you feel comfortable doing so, I'd also suggest putting your [resume](https://christine.website/resume)

|

||||

If you feel comfortable doing so, I'd also suggest putting your [resume](https://xeiaso.net/resume)

|

||||

on this site too. Even if it's just got your foodservice jobs or education

|

||||

history (including your high school diploma if need be).

|

||||

|

||||

|

|

@ -47,7 +47,7 @@ not.

|

|||

## Make a Tech Blog On That Site

|

||||

|

||||

This has been the single biggest thing to help me grow professionally. I regularly

|

||||

put [articles](https://christine.website/blog) on my blog, sometimes not even about

|

||||

put [articles](https://xeiaso.net/blog) on my blog, sometimes not even about

|

||||

technology topics. Even if you are writing about your take on something people have

|

||||

already written about, it's still good practice. Your early posts are going to be

|

||||

rough. It's normal to not be an expert when starting out in a new skill.

|

||||

|

|

|

|||

|

|

@ -54,7 +54,7 @@ by it. That attempt to come out failed and I was put into Christian

|

|||

writing down my thoughts in a journal to this day.

|

||||

|

||||

So that day I hit "send" on [the

|

||||

email](https://christine.website/blog/coming-out-2015-12-01) was mortally

|

||||

email](https://xeiaso.net/blog/coming-out-2015-12-01) was mortally

|

||||

terrifying. All that fear from so long ago came raging up to the surface and I

|

||||

was left in a crying and vulnerable state. However it ended up being a good kind

|

||||

of cry, the healing kind.

|

||||

|

|

|

|||

|

|

@ -21,7 +21,7 @@ named [dyson][dyson] in order to help me manage Terraform as well as create

|

|||

Kubernetes manifests from [a template][template]. This works for the majority of

|

||||

my apps, but it is difficult to extend at this point for a few reasons:

|

||||

|

||||

[cultk8s]: https://christine.website/blog/the-cult-of-kubernetes-2019-09-07

|

||||

[cultk8s]: https://xeiaso.net/blog/the-cult-of-kubernetes-2019-09-07

|

||||

[dyson]: https://github.com/Xe/within-terraform/tree/master/dyson

|

||||

[template]: https://github.com/Xe/within-terraform/blob/master/dyson/src/dysonPkg/deployment_with_ingress.yaml

|

||||

|

||||

|

|

|

|||

|

|

@ -0,0 +1,205 @@

|

|||

---

|

||||

title: Writing Coherently At Scale

|

||||

date: 2022-06-29

|

||||

tags:

|

||||

- writing

|

||||

vod:

|

||||

youtube: https://youtu.be/pDOoqqu06-8

|

||||

twitch: https://www.twitch.tv/videos/1513874389

|

||||

---

|

||||

|

||||

As someone who does a lot of writing, I have been asked how to write about

|

||||

things. I have been asked about it enough that I am documenting this here so you

|

||||

can all understand my process. This is not a prescriptive system that you must

|

||||

do in order to make Quality Content™️, this is what I do.

|

||||

|

||||

<xeblog-conv name="Cadey" mood="coffee">I honestly have no idea if this is a

|

||||

"correct" way of doing things, but it seems to work well enough. Especially so

|

||||

if you are reading this.</xeblog-conv>

|

||||

|

||||

<xeblog-hero file="great-wave-cyberpunk" prompt="the great wave off of kanagawa, cyberpunk, hanzi inscription"></xeblog-hero>

|

||||

|

||||

## The Planning Phase

|

||||

|

||||

To start out the process of writing about something, I usually like to start

|

||||

with the end goal in mind. If I am writing about an event or technology thing,

|

||||

I'll start out with a goal that looks something like this:

|

||||

|

||||

> Explain a split DNS setup and its advantages and weaknesses so that people can

|

||||

> make more informed decisions about their technical setups.

|

||||

|

||||

It doesn't have to be very complicated or intricate. Most of the complexity

|

||||

comes up naturally during the process of writing the intermediate steps. Think

|

||||

about the end goal or what you want people to gain from reading the article.

|

||||

|

||||

I've also found it helps to think about the target audience and assumed skills

|

||||

of the reader. I'll usually list out the kind of person that would benefit from

|

||||

this the most and how it will help them. Here's an example:

|

||||

|

||||

> The reader is assumed to have some context about what DNS is and wants to help

|

||||

> make their production environment more secure, but isn't totally clear on how

|

||||

> it helps and what tradeoffs are made.

|

||||

|

||||

State what the reader is to you and how the post benefits them. Underthink it.

|

||||

It's tempting to overthink this, but really don't. You can overthink the

|

||||

explanations later.

|

||||

|

||||

### The Outline

|

||||

|

||||

Once I have an end goal and the target audience in mind, then I make an outline

|

||||

of what I want the post to contain. This outline will have top level items for

|

||||

generic parts of the article or major concepts/steps and then I will go in and

|

||||

add more detail inside each top level item. Here is an example set of top level

|

||||

items for that split DNS post:

|

||||

|

||||

```markdown

|

||||

- Introduction

|

||||

- Define split DNS

|

||||

- How split DNS is different

|

||||

- Where you can use split DNS

|

||||

- Advantages of split DNS

|

||||

- Tradeoffs of split DNS

|

||||

- Conclusion

|

||||

```

|

||||

|

||||

Each step should build on the last and help you reach towards the end goal.

|

||||

|

||||

After I write the top level outline, I start drilling down into more detail. As

|

||||

I drill down into more detail about a thing, the bullet points get nested

|

||||

deeper, but when topics change then I go down a line. Here's an example:

|

||||

|

||||

```markdown

|

||||

- Introduction

|

||||

- What is DNS?

|

||||

- Domain Name System

|

||||

- Maps names to IP addresses

|

||||

- Sometimes it does other things, but we're not worrying about that today

|

||||

- Distributed system

|

||||

- Intended to have the same data everywhere in the world

|

||||

- It can take time for records to be usable from everywhere

|

||||

```

|

||||

|

||||

Then I will go in and start filling in the bullet tree with links and references

|

||||

to each major concept or other opinions that people have had about the topic.

|

||||

For example:

|

||||

|

||||

```markdown

|

||||

- Introduction

|

||||

- What is DNS?

|

||||

- Domain Name System

|

||||

- https://datatracker.ietf.org/doc/html/rfc1035

|

||||

- Maps names to IP addresses

|

||||

- Sometimes it does other things, but we're not worrying about that today

|

||||

- Distributed system

|

||||

- Intended to have the same data everywhere in the world

|

||||

- It can take time for records to be usable from everywhere

|

||||

- https://jvns.ca/blog/2021/12/06/dns-doesn-t-propagate/

|

||||

```

|

||||

|

||||

These help me write about the topic and give me links to add to the post so that

|

||||

people can understand more if they want to. You should spend most of your time

|

||||

writing the outline. The rest is really just restating the outline in sentences.

|

||||

|

||||

## Writing The Post

|

||||

|

||||

After each top level item is fleshed out enough, I usually pick somewhere to

|

||||

start and add some space after a top level item. Then I just start writing. Each

|

||||

top level item usually maps to a few paragraphs / a section of the post. I

|

||||

usually like to have each section have its own little goal / context to it so

|

||||

that readers start out from not understanding something and end up understanding

|

||||

it better. Here's an example:

|

||||

|

||||

> If you have used a computer in the last few decades or so, you have probably

|

||||

> used the Domain Name System (DNS). DNS maps human-readable names (like

|

||||

> `google.com`) to machine-readable IP addresses (like `182.48.247.12`). Because

|

||||

> of this, DNS is one of the bedrock protocols of the modern internet and it

|

||||

> usually is the cause of most failures in big companies.

|

||||

>

|

||||

> DNS is a globally distributed system without any authentication or way to

|

||||

> ensure that only authorized parties can query IP addresses for specific domain

|

||||

> names. As a consequence, anyone can get the IP address

|

||||

> of a given server if they have the DNS name for it. This also means that

|

||||

> updating a DNS record can take a nontrivial amount of time to be visible from

|

||||

> everywhere in the world.

|

||||

>

|

||||

> Instead of using public DNS records for internal services, you can set up a

|

||||

> split DNS configuration so that you run an internal DNS server that has your

|

||||

> internal service IP addresses obscured away from the public internet. This

|

||||

> means that attackers can't get their hands on the IP addresses of your

|

||||

> services so that they are harder to attack. In this article, I'm going to

|

||||

> spell out how this works, the advantages of this setup, the tradeoffs made in

|

||||

> the process and how you can implement something like this for yourself.

|

||||

|

||||

In the process of writing, I will find gaps in the outline and just fix them by

|

||||

writing more words than the outline suggested. This is okay, and somewhat

|

||||

normal. Go with the flow.

|

||||

|

||||

I expand each major thing into its component paragraphs and will break things up

|

||||

into sections with markdown headers if there is a huge change in topics. Adding

|

||||

section breaks can also help people stay engaged with the post. Giant walls of

|

||||

text are hard to read and can make people lose focus easily.

|

||||

|

||||

Another trick I use to avoid my posts being giant walls of text is what I call

|

||||

"conversation snippets". These look like this:

|

||||

|

||||

<xeblog-conv name="Mara" mood="hacker">These are words and I am saying

|

||||

them!</xeblog-conv>

|

||||

|

||||

I use them for both creating [Socratic

|

||||

dialogue](https://en.wikipedia.org/wiki/Socratic_dialogue) and to add prose

|

||||

flair to my writing. I am more of a prose writer [by

|

||||

nature](https://xeiaso.net/blog/the-oasis), and I find that this mix allows me

|

||||

to keep both technical and artistic writing up to snuff.

|

||||

|

||||

<xeblog-conv name="Cadey" mood="enby">Amusingly, I get asked if the characters

|

||||

in my blog are separate people all giving their input into things. They are

|

||||

characters, nothing more. If you ever got an impression otherwise, then I have

|

||||

done my job as a writer _incredibly well_.</xeblog-conv>

|

||||

|

||||

Just flesh things out and progressively delete parts of the outline as you go.

|

||||

It gets easier.

|

||||

|

||||

### Writing The Conclusion

|

||||

|

||||

I have to admit, I really suck at writing conclusions. They are annoying for me

|

||||

to write because I usually don't know what to put there. Sometimes I won't even

|

||||

write a conclusion at all and just end the article there. This doesn't always

|

||||

work though.

|

||||

|

||||

A lot of the time when I am describing how to do things I will end the article

|

||||

with a "call to action". This is a few sentences that encourage the reader to

|

||||

try out the thing that I've been writing about for themselves. If I were turning

|

||||

that split DNS article from earlier into a full article, the conclusion

|

||||

could look something like this:

|

||||

|

||||

> ---

|

||||

>

|

||||

> If you want an easy way to try out a split DNS configuration, install

|

||||

> [Tailscale](https://tailscale.com/) on a couple virtual machines and enable

|

||||

> [MagicDNS](https://tailscale.com/kb/1081/magicdns/). This will set up a split

|

||||

> DNS configuration with a domain that won't resolve globally, such as

|

||||

> `hostname.example.com.beta.tailscale.net`, or just `hostname` for short.

|

||||

>

|

||||

> I use this in my own infrastructure constantly. It has gotten to the point

|

||||

> where I regularly forget that Tailscale is involved at all, and become

|

||||

> surprised when I can't just access machines by name.

|

||||

>

|

||||

> A split DNS setup isn't a security feature (if anything, it's more of an

|

||||

> obscurity feature), but you can use it to help administrate your systems by

|

||||

> making your life easier. You can update records on your own schedule and you

|

||||

> don't have to worry about outside attackers getting the IP addresses of your

|

||||

> services.

|

||||

|

||||

I don't like giving the conclusion a heading, so I'll usually use a [horizontal

|

||||

rule (`---` or `<hr

|

||||

/>`)](https://www.coffeecup.com/help/articles/what-is-a-horizontal-rule/) to

|

||||

break it off.

|

||||

|

||||

---

|

||||

|

||||

This is how I write about things. Do you have a topic in mind that you have

|

||||

wanted to write about for a while? Try this system out! If you get something

|

||||

that you like and want feedback on how to make it shine, email me at

|

||||

`iwroteanarticle at xeserv dot us` with either a link to it or the draft

|

||||

somehow. I'll be sure to read it and reply back with both what I liked and some

|

||||

advice on how to make it even better.

|

||||

|

|

@ -82,7 +82,7 @@ terrible idea. Microservices architectures are not planned. They are an

|

|||

evolutionary result, not a fully anticipated feature.

|

||||

|

||||

Finally, don’t “design for the future”. The future [hasn’t happened

|

||||

yet](https://christine.website/blog/all-there-is-is-now-2019-05-25). Nobody

|

||||

yet](https://xeiaso.net/blog/all-there-is-is-now-2019-05-25). Nobody

|

||||

knows how it’s going to turn out. The future is going to happen, and you can

|

||||

either adapt to it as it happens in the Now or fail to. Don’t make things overly

|

||||

modular, that leads to insane things like dynamically linking parts of an

|

||||

|

|

|

|||

|

|

@ -279,7 +279,7 @@ step.

|

|||

The deploy step does two small things. First, it installs

|

||||

[dhall-yaml](https://github.com/dhall-lang/dhall-haskell/tree/master/dhall-yaml)

|

||||

for generating the Kubernetes manifest (see

|

||||

[here](https://christine.website/blog/dhall-kubernetes-2020-01-25)) and then

|

||||

[here](https://xeiaso.net/blog/dhall-kubernetes-2020-01-25)) and then

|

||||

runs

|

||||

[`scripts/release.sh`](https://tulpa.dev/cadey/printerfacts/src/branch/master/scripts/release.sh):

|

||||

|

||||

|

|

|

|||

|

|

@ -67,7 +67,7 @@ Hopefully Valve can improve the state of VR on Linux with the "deckard".

|

|||

|

||||

2021 has had some banger releases. Halo Infinite finally dropped. Final Fantasy

|

||||

7 Remake came to PC. [Metroid

|

||||

Dread](https://christine.website/blog/metroid-dread-review-2021-10-10) finally

|

||||

Dread](https://xeiaso.net/blog/metroid-dread-review-2021-10-10) finally

|

||||

came out after being rumored for more than half of my lifetime. Forza Horizon 5

|

||||

raced out into the hearts of millions. Overall, it was a pretty good year to be

|

||||

a gamer.

|

||||

|

|

|

|||

|

|

@ -0,0 +1,95 @@

|

|||

---

|

||||

title: "Fly.io: the Reclaimer of Heroku's Magic"

|

||||

date: 2022-05-15

|

||||

tags:

|

||||

- flyio

|

||||

- heroku

|

||||

vod:

|

||||

twitch: https://www.twitch.tv/videos/1484123245

|

||||

youtube: https://youtu.be/BAgzkKpLVt4

|

||||

---

|

||||

|

||||

Heroku was catalytic to my career. It's been hard to watch the fall from grace.

|

||||

Don't get me wrong, Heroku still _works_, but it's obviously been in maintenance

|

||||

mode for years. When I worked there, there was a goal that just kind of grew in

|

||||

scope over and over without reaching an end state: the Dogwood stack.

|

||||

|

||||

In Heroku each "stack" is the substrate the dynos run on. It encompasses the AWS

|

||||

runtime, the HTTP router, the logging pipeline and a bunch of the other

|

||||

infrastructure like the slug builder and the deployment infrastructure. The

|

||||

three stacks Heroku has used are named after trees: Aspen, Bamboo and Cedar.

|

||||

Every Heroku app today runs on the Cedar stack, and compared to Bamboo it was a

|

||||

generational leap in capability. Cedar was what introduced buildpacks and

|

||||

support for any language under the sun. Prior stacks railroaded you into Ruby on

|

||||

Rails (Heroku used to be a web IDE for making Rails apps). However, there were

|

||||

always plans to improve with another generational leap. This ended up being

|

||||

called the "Dogwood stack", but Dogwood never totally materialized because it

|

||||

was too ambitious for Heroku to handle post-acquisition. Parts of Dogwood's

|

||||

roadmap ended up being used in the implementation of Private Spaces, but as a

|

||||

whole I don't expect Dogwood to materialize in Heroku in the way we all had

|

||||

hoped.

|

||||

|

||||

However, I can confidently say that [fly.io](https://fly.io) seems like a viable

|

||||

inheritor of the mantle of responsibility that Heroku has left into the hands of

|

||||

the cloud. fly.io is a Platform-as-a-Service that hosts your applications on top

|

||||

of physical dedicated servers run all over the world instead of being a reseller

|

||||

of AWS. This allows them to get your app running in multiple regions for a lot

|

||||

less than it would cost to run it on Heroku. They also use anycasting to allow

|

||||

your app to use the same IP address globally. The internet itself will load

|

||||

balance users to the nearest instance using BGP as the load balancing

|

||||

substrate.

|

||||

|

||||

<xeblog-conv name="Cadey" mood="enby">People have been asking me what I would

|

||||

suggest using instead of Heroku. I have been unable to give a good option until

|

||||

now. If you are dissatisfied with the neglect of Heroku in the wake of the

|

||||

Salesforce acquisition, take a look at fly.io. Its free tier is super generous.

|

||||

I worked at Heroku and I am beyond satisfied with it. I'm considering using it

|

||||

for hosting some personal services that don't need something like

|

||||

NixOS.</xeblog-conv>

|

||||

|

||||

Applications can be built either using [cloud native

|

||||

buildpacks](https://fly.io/docs/reference/builders/), Dockerfiles or arbitrary

|

||||

docker images that you generated with something like Nix's

|

||||

`pkgs.dockerTools.buildLayeredImage`. This gives you freedom to do whatever you

|

||||

want like the Cedar stack, but at a fraction of the cost. Its default instance

|

||||

size is likely good enough to run the blog you are reading right now and would

|

||||

be able to do that for $2 a month plus bandwidth costs (I'd probably estimate

|

||||

that to be about $3-5, depending on how many times I get on the front page of

|

||||

Hacker News).

|

||||

|

||||

You can have persistent storage in the form of volumes, poke the internal DNS

|

||||

server fly.io uses for service discovery, run apps that use arbitrary TCP/UDP

|

||||

ports (even a DNS server!), connect to your internal network over WireGuard, ssh

|

||||

into your containers, and import Heroku apps into fly.io without having to

|

||||

rebuild them. This is what the Dogwood stack should have been. This represents a

|

||||

generational leap in the capabilities of what a Platform as a Service can do.

|

||||

|

||||

The stream VOD in the footer of this post contains my first impressions using

|

||||

fly.io to try and deploy an app written with [Deno](https://deno.land) to the

|

||||

cloud. I ended up creating a terrible CRUD app on stream using SQLite that

|

||||

worked perfectly beyond expectations. I was able to _restart the app_ and my

|

||||

SQLite database didn't get blown away. I could easily imagine myself combining

|

||||

something like [litestream](https://litestream.io) into my docker images to

|

||||

automate offsite backups of SQLite databases like this. It was magical.

|

||||

|

||||

<xeblog-conv name="Mara" mood="happy">If you've never really used Heroku, for

|

||||

context each dyno has a mutable filesystem. However that filesystem gets blown

|

||||

away every time a dyno reboots. Having something that is mutable and persistent

|

||||

is mind-blowing.</xeblog-conv>

|

||||

|

||||

Everything else you expect out of Heroku works like you'd expect in fly.io. The

|

||||

only things I can see missing are automated Redis hosting by the platform

|

||||

(however this seems intentional as fly.io is generic enough [to just run redis

|

||||

directly for you](https://fly.io/docs/reference/redis/)) and the marketplace.

|

||||

The marketplace being absent is super reasonable, seeing as Heroku's marketplace

|

||||

only really started existing as a result of them being the main game in town

|

||||

with all the mindshare. fly.io is a voice among a chorus, so it's understandable

|

||||

that it wouldn't have the same treatment.

|

||||

|

||||

Overall, I would rate fly.io as a worthy inheritor of Heroku's mantle as the

|

||||

platform as a service that is just _magic_. It Just Works™️. There was no

|

||||

fighting it at a platform level, it just worked. Give it a try.

|

||||

|

||||

<xeblog-conv name="Cadey" mood="enby">Don't worry

|

||||

[@tqbf](https://twitter.com/tqbf), fly.io put in a good showing. I still wanna

|

||||

meet you at some conference.</xeblog-conv>

|

||||

|

|

@ -8,7 +8,7 @@ series: conlangs

|

|||

|

||||

`h` is a conlang project that I have been working off and on for years. It is infinitely simply teachable, trivial to master and can be used to represent the entire scope of all meaning in any facet of the word. All with a single character.

|

||||

|

||||

This is a continuation from [this post](https://christine.website/blog/the-origin-of-h-2015-12-14). If this post makes sense to you, please let me know and/or schedule a psychologist appointment just to be safe.

|

||||

This is a continuation from [this post](https://xeiaso.net/blog/the-origin-of-h-2015-12-14). If this post makes sense to you, please let me know and/or schedule a psychologist appointment just to be safe.

|

||||

|

||||

## Phonology

|

||||

|

||||

|

|

|

|||

|

|

@ -363,14 +363,14 @@ my blog's [JSONFeed](/blog.json):

|

|||

#!/usr/bin/env bash

|

||||

# xeblog-post.sh

|

||||

|

||||

curl -s https://christine.website/blog.json | jq -r '.items[0] | "\(.title) \(.url)"'

|

||||

curl -s https://xeiaso.net/blog.json | jq -r '.items[0] | "\(.title) \(.url)"'

|

||||

```

|

||||

|

||||

At the time of writing this post, here is the output I get from this command:

|

||||

|

||||

```

|

||||

$ ./xeblog-post.sh

|

||||

Anbernic RG280M Review https://christine.website/blog/rg280m-review

|

||||

Anbernic RG280M Review https://xeiaso.net/blog/rg280m-review

|

||||

```

|

||||

|

||||

What else could you do with pipes and redirection? The cloud's the limit!

|

||||

|

|

|

|||

|

|

@ -16,7 +16,7 @@ it. This is a sort of spiritual successor to my old

|

|||

ecosystem since then, as well as my understanding of the language.

|

||||

|

||||

[go]: https://golang.org

|

||||

[gswg]: https://christine.website/blog/getting-started-with-go-2015-01-28

|

||||

[gswg]: https://xeiaso.net/blog/getting-started-with-go-2015-01-28

|

||||

|

||||

Like always, feedback is very welcome. Any feedback I get will be used to help

|

||||

make this book even better.

|

||||

|

|

|

|||

|

|

@ -0,0 +1,565 @@

|

|||

---

|

||||

title: Crimes with Go Generics

|

||||

date: 2022-04-24

|

||||

tags:

|

||||

- cursed

|

||||

- golang

|

||||

- generics

|

||||

vod:

|

||||

twitch: https://www.twitch.tv/videos/1465727432

|

||||

youtube: https://youtu.be/UiJtaKYQnzg

|

||||

---

|

||||

|

||||

Go 1.18 added [generics](https://go.dev/doc/tutorial/generics) to the

|

||||

language. This allows you to have your types take types as parameters

|

||||

so that you can create composite types (types out of types). This lets

|

||||

you get a lot of expressivity and clarity about how you use Go.

|

||||

|

||||

However, if you are looking for good ideas on how to use Go generics,

|

||||

this is not the post for you. This is full of bad ideas. This post is

|

||||

full of ways that you should not use Go generics in production. Do not

|

||||

copy the examples in this post into production. By reading this post

|

||||

you agree to not copy the examples in this post into production.

|

||||

|

||||

I have put my code for this article [on my git

|

||||

server](https://tulpa.dev/internal/gonads). This repo has been

|

||||

intentionally designed to be difficult to use in production by me

|

||||

taking the following steps:

|

||||

|

||||

1. I have created it under a Gitea organization named `internal`. This

|

||||

will make it impossible for you to import the package unless you

|

||||

are using it from a repo on my Gitea server. Signups are disabled

|

||||

on that Gitea server. See

|

||||

[here](https://go.dev/doc/go1.4#internalpackages) for more

|

||||

information about the internal package rule.

|

||||

1. The package documentation contains a magic comment that will make

|

||||

Staticcheck and other linters complain that you are using this

|

||||

package even though it is deprecated.

|

||||

|

||||

<xeblog-conv name="Mara" mood="hmm">What is that package

|

||||

name?</xeblog-conv>

|

||||

|

||||

<xeblog-conv name="Cadey" mood="enby">It's a reference to

|

||||

Haskell's monads, but adapted to Go as a pun.</xeblog-conv>

|

||||

|

||||

<xeblog-conv name="Numa" mood="delet">A gonad is just a gonoid in the

|

||||

category of endgofunctors. What's there to be confused

|

||||

about?</xeblog-conv>

|

||||

|

||||

<xeblog-conv name="Cadey" mood="facepalm">\*sigh\*</xeblog-conv>

|

||||

|

||||

## `Queue[T]`

|

||||

|

||||

To start things out, let's show off a problem in computer science that

|

||||

is normally difficult. Let's make an MPMS (multiple producer, multiple

|

||||

subscriber) queue.

|

||||

|

||||

First we are going to need a struct to wrap everything around. It will

|

||||

look like this:

|

||||

|

||||

```go

|

||||

type Queue[T any] struct {

|

||||

data chan T

|

||||

}

|

||||

```

|

||||

|

||||

This creates a type named `Queue` that takes a type argument `T`. This

|

||||

`T` can be absolutely anything; the only requirement is that the

|

||||

data is a Go type.

|

||||

|

||||

You can create a little constructor for `Queue` instances with a

|

||||

function like this:

|

||||

|

||||

```go

|

||||

func NewQueue[T any](size int) Queue[T] {

|

||||

return Queue[T]{

|

||||

data: make(chan T, size),

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

Now let's make some methods on the `Queue` struct that will let us

|

||||

push to the queue and pop from the queue. They could look like this:

|

||||

|

||||

```go

|

||||

func (q Queue[T]) Push(val T) {

|

||||

q.data <- val

|

||||

}

|

||||

|

||||

func (q Queue[T]) Pop() T {

|

||||

return <-q.data

|

||||

}

|

||||

```

|

||||

|

||||

These methods will let you put data at the end of the queue and then

|

||||

pull it out from the beginning. You can use them like this:

|

||||

|

||||

```go

|

||||

q := NewQueue[string](5)

|

||||

q.Push("hi there")

|

||||

str := q.Pop()

|

||||

if str != "hi there" {

|

||||

panic("string is wrong")

|

||||

}

|

||||

```

|

||||

|

||||

This is good, but the main problem comes from trying to pop from an

|

||||

empty queue. It'll stay there forever doing nothing. We can use the

|

||||

`select` statement to allow us to write a nonblocking version of the

|

||||

`Pop` function:

|

||||

|

||||

```go

|

||||

func (q Queue[T]) TryPop() (T, bool) {

|

||||

select {

|

||||

case val := <-q.data:

|

||||

return val, true

|

||||

default:

|

||||

return nil, false

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

However, when we try to compile this, we get an error:

|

||||

|

||||

```

|

||||

cannot use nil as T value in return statement

|

||||

```

|

||||

|

||||

In that code, `T` can be _anything_, including values that may not be

|

||||

able to be `nil`. We can work around this by taking advantage of the

|

||||

`var` statement, which makes a new variable and initializes it to the

|

||||

zero value of that type:

|

||||

|

||||

```go

|

||||

func Zero[T any]() T {

|

||||

var zero T

|

||||

return zero

|

||||

}

|

||||

```

|

||||

|

||||

When we run the `Zero` function like

|

||||

[this](https://go.dev/play/p/Z5tRs1-aKBU):

|

||||

|

||||

```go

|

||||

log.Printf("%q", Zero[string]())

|

||||

log.Printf("%v", Zero[int]())

|

||||

```

|

||||

|

||||

We get output that looks like this:

|

||||

|

||||

```

|

||||

2009/11/10 23:00:00 ""

|

||||

2009/11/10 23:00:00 0

|

||||

```

|

||||

|

||||

So we can adapt the `default` branch of `TryPop` to this:

|

||||

|

||||

```go

|

||||

func (q Queue[T]) TryPop() (T, bool) {

|

||||

select {

|

||||

case val := <-q.data:

|

||||

return val, true

|

||||

default:

|

||||

var zero T

|

||||

return zero, false

|

||||

}

|

||||

}

|

||||

```
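
To see the nonblocking behavior in action, here is a small self-contained sketch. It repeats `Queue`, `NewQueue`, `Push` and `TryPop` from above so that it compiles on its own; the `drain` helper is a hypothetical addition for illustration and is not part of the gonads repo.

```go
package main

import "fmt"

// Queue, NewQueue, Push and TryPop are repeated from the post.
type Queue[T any] struct {
	data chan T
}

func NewQueue[T any](size int) Queue[T] {
	return Queue[T]{data: make(chan T, size)}
}

func (q Queue[T]) Push(val T) {
	q.data <- val
}

func (q Queue[T]) TryPop() (T, bool) {
	select {
	case val := <-q.data:
		return val, true
	default:
		var zero T
		return zero, false
	}
}

// drain is a hypothetical helper: it keeps calling TryPop until the
// queue reports that it is empty, and returns what it collected.
func drain[T any](q Queue[T]) []T {
	var out []T
	for {
		val, ok := q.TryPop()
		if !ok {
			return out
		}
		out = append(out, val)
	}
}

func main() {
	q := NewQueue[string](2)

	// Popping from an empty queue returns immediately instead of blocking.
	_, ok := q.TryPop()
	fmt.Println(ok) // false

	q.Push("a")
	q.Push("b")
	fmt.Println(drain(q)) // [a b]
}
```

Note that a `false` result only means the queue was empty at the moment of the call. With concurrent producers, another value may arrive right after.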

|

||||

|

||||

And finally write a test for good measure:

|

||||

|

||||

```go

|

||||

func TestQueue(t *testing.T) {

|

||||

q := NewQueue[int](5)

|

||||

for i := range make([]struct{}, 5) {

|

||||

q.Push(i)

|

||||

}

|

||||

|

||||

for range make([]struct{}, 5) {

|

||||

t.Log(q.Pop())

|

||||

}

|

||||

}

|

||||

```
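
The test above only exercises the queue from a single goroutine. As a hedged sketch of the "multiple producer, multiple subscriber" part, the example below pushes from several goroutines and pops from several others. The `sumThroughQueue` function is a hypothetical name, and it assumes the total number of items divides evenly among the consumers, because the blocking `Pop` would otherwise wait forever.

```go
package main

import (
	"fmt"
	"sync"
)

// Queue, NewQueue, Push and Pop are repeated from the post.
type Queue[T any] struct {
	data chan T
}

func NewQueue[T any](size int) Queue[T] {
	return Queue[T]{data: make(chan T, size)}
}

func (q Queue[T]) Push(val T) {
	q.data <- val
}

func (q Queue[T]) Pop() T {
	return <-q.data
}

// sumThroughQueue (hypothetical) starts `producers` goroutines that each
// push 1..perProducer, and `consumers` goroutines that pop an equal share
// and add what they get to a shared total.
// Assumption: producers*perProducer is divisible by consumers.
func sumThroughQueue(producers, perProducer, consumers int) int {
	q := NewQueue[int](4)
	perConsumer := producers * perProducer / consumers

	var wg sync.WaitGroup
	var mu sync.Mutex
	total := 0

	for p := 0; p < producers; p++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := 1; i <= perProducer; i++ {
				q.Push(i)
			}
		}()
	}

	for c := 0; c < consumers; c++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := 0; i < perConsumer; i++ {
				val := q.Pop()
				mu.Lock()
				total += val
				mu.Unlock()
			}
		}()
	}

	wg.Wait()
	return total
}

func main() {
	// 3 producers each push 1+2+3+4 = 10, so the total is 30.
	fmt.Println(sumThroughQueue(3, 4, 2)) // 30
}
```

The channel does all of the synchronization for the queue itself. The mutex is only there to protect the shared `total`.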

|

||||

|

||||

## `Option[T]`

|

||||

|

||||

In Go, people use pointer values for a number of reasons:

|

||||

|

||||

1. A pointer value may be `nil`, so this can signal that the value may

|

||||

not exist.

|

||||

1. A pointer value only stores the address in memory, so passing around

|

||||

the value causes Go to only copy the pointer instead of copying the

|

||||

value being passed around.

|

||||

1. A pointer value being passed to a function lets you mutate values

|

||||

in the value being passed. Otherwise Go will copy the value and you

|

||||

can mutate it all you want, but the changes you made will not

|

||||

persist past that function call. You can sort of consider this to

|

||||

be "immutable", but it's not as strict as something like passing

|

||||

`&mut T` to functions in Rust.
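
The uses of pointers listed above can be sketched in a few lines of plain Go. The `Config` type and the helper functions here are hypothetical and exist only for illustration.

```go
package main

import "fmt"

// Config is a hypothetical type used only for this sketch.
type Config struct {
	Name    string
	Retries int
}

// findConfig returns nil when nothing matches, which is the
// "this value may not exist" use of a pointer.
func findConfig(configs []Config, name string) *Config {
	for i := range configs {
		if configs[i].Name == name {
			return &configs[i]
		}
	}
	return nil
}

// bumpByValue receives a copy, so the caller never sees the change.
func bumpByValue(c Config) {
	c.Retries++
}

// bumpByPointer receives only the pointer and mutates the caller's value.
func bumpByPointer(c *Config) {
	c.Retries++
}

func main() {
	configs := []Config{{Name: "web", Retries: 1}}

	fmt.Println(findConfig(configs, "db") == nil) // true

	web := findConfig(configs, "web")
	bumpByValue(*web)
	fmt.Println(configs[0].Retries) // 1

	bumpByPointer(web)
	fmt.Println(configs[0].Retries) // 2
}
```

The catch with the first use is that nothing forces the caller to check for `nil` before dereferencing the result.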

|

||||

|

||||

This `Option[T]` type will help us model the first kind of constraint:

|

||||

a value that may not exist. We can define it like this:

|

||||

|

||||

```go

|

||||

type Option[T any] struct {

|

||||

val *T

|

||||

}

|

||||

```

|

||||

|

||||

Then you can define a couple methods to use this container:

|

||||

|

||||

```go

|

||||

var ErrOptionIsNone = errors.New("gonads: Option[T] has no value")

|

||||

|

||||

func (o Option[T]) Take() (T, error) {

|

||||

if o.IsNone() {

|

||||

var zero T

|

||||

return zero, ErrOptionIsNone

|

||||